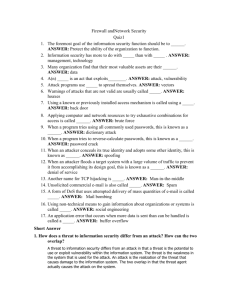

Information Security Risk Management: In Which

advertisement