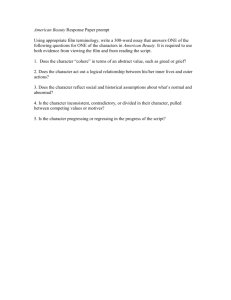

UNIX scripting (and Cyberquery) Toolbox


Array User,

This document contains instructions on how to write UNIX shell scripts. Although it is basic enough for the beginner, it is also full of examples and tips for both UNIX and CQ that may be useful to the more advanced shell script writer as well.

Use this document, but also remember to give back to the Array User Group community by sharing your shell scripts. Enjoy…

Why learn UNIX shell scripting?

Where can I learn more about UNIX scripting?

Books

/tss/macros/

Array User Group (AUG)

Internet

Man pages

Build a UNIX script, step-by-step

Start with your PATH

Creating a script with vi

All the vi commands you will ever need

Use your PC to copy and paste with vi

Making a script executable

Making an example script

Troubleshooting your scripts

What happens when a script fails

Using the shell –x option

Troubleshooting tips

Build a CQ macro file

Using collections

Using parameter files

Using genrec.cq for multi-passes in a macro

Using CLI within a CQ .mf macro

A few CQ tips

Using a genrec.cq file for multi-pass queries

Creating a zero-length report.

Using email

mailx vs. mail command

email to individual(s) automatically

email attachments automatically

email using .forward files

script to fix owner of .forward files

email /etc/alias file

email zero file size

email using a script from a parsed CQ report

UNIX shell scripting basics

Redirection

Quotation marks

Variables

Loops ( for loop, while loop, until loop )

Testing for conditions

Test utility operator table

Using if, then, else, elif, and case statements

Controlling errors

Catching and controlling errors in a CQ .mf macro

Catching and controlling errors in a UNIX shell script

Checking the previous command for errors.

Automating your shell scripts

Modifying the cron daemon

Examples of cron jobs

Modifying your CQJOB.SH nightly jobstream script

Kicking off CQJOB.SH with cron

Examples of time-dependent scripts within CQJOB.SH

Making and compiling a Cobol script to ‘99’ from Array

Connecting to a different machine automatically

Retrieving PFMS from Trade Service Corp.

Retrieving part override report from TPW

A full-blown example of shell scripting and CQ working together:

A complete sales order aging subsystem

Overview of the order aging system.

Script to move previous day’s files

Capturing all allocated items with CQ

Scripts for certain business days

One last management tip

Why learn UNIX scripting?

Using UNIX scripting and CQ together, you will be able to automate many tasks, not just for your own (IT) benefit but for your entire company. Learning to put the tools together will give you a freedom in development that will exceed your expectations.

CQ alone has limitations. Because CQ is a report writer, information can be extracted, but combining queries or making decisions about what to do with the information is beyond CQ. Likewise, UNIX scripting has limitations, the primary one being that it does not (easily) access your data. However, the combination of CQ and UNIX scripting is incredibly powerful. Together they can produce the type of functionality that otherwise takes a high-level programming language, and they are much easier to learn than another programming language.

You will probably discover that you ALREADY know UNIX scripting, because you have been exposed to UNIX utilities, and for the most part scripting is just stringing together UNIX utilities. If you know Cyberquery you have already conquered the hard part! Now all you have to do is take that knowledge and make it automatic.

Where can I learn more about UNIX scripting?

The first hurdle to get over when writing a script is that the system gives you absolutely no help. You are faced with a blank screen, and you just type in the commands that magically jump out of your fingers. One of the books recommended below calls UNIX “the ultimate user-hostile environment”. Obviously you are going to need some outside help. Here are some places to learn:

Books:

Good old-fashioned books are the best source by far. You may need several of them on your bookshelf as handy references, because they usually serve different purposes. Here are three recommendations, but there are many others not listed here that are just as worthy.

Title: UNIX Made Easy
By: John Muster and Associates
1,061 pages, $34.95

This is a good one to begin with, but it is also good for the expert. It focuses on each utility and goes into excruciating detail on each, showing exactly how each one works step-by-step. If you are going to buy just one book, then this is the one.

Title: UNIX Complete
By: Peter Dyson, Stan Kelly-Bootle, John Heilborn
1,006 pages, $19.99

This is a good book for looking up a utility and how it works, kind of like small, easy-to-read man pages. Even if you have UNIX Made Easy you may want this one as a kind of second opinion. This book covers UNIX flavors other than AIX, so if you pick up a script from the Internet or some other non-AIX source, it is a good place to look up utilities that are not part of AIX.

Title: UNIX Shell Programming (Revised Edition)
By: Stephen Kochan and Patrick Wood
487 pages, $29.95

This is a good intermediate book that covers the details of loops and other programming techniques in an easy-to-read, textbook-like format.

/tss/macros/

Trade Service Systems (TSS) keeps its working scripts in this directory. Sometimes you can find a good one in here. Be careful, though: there are old scripts that no longer apply, scripts that never worked right in the first place, etc. This is TSS's workspace, and you have to make sure that you know what a script does before you run one or even copy it. One of the best uses for this directory is to see how a utility is used. For example, this command will find all the places they use the “chmod” command:

$ grep chmod /tss/macros/*.sh | pg

One thing to stress: you are not paying TSS for UNIX scripting support. It is not fair to TSS to look at their scripts and then call them for help modifying them (although the people in the operations department are very nice). Plan on achieving UNIX scripting expertise without TSS.

Array User Group (AUG)

Send an email with your questions to the AUG mailing list. It is amazing what other users will be willing to give you or help you through. AUG is your best source of information and help, but please don't forget to give back to the AUG community. If you have a particularly good script, please post it to the AUG site or the AUG email list.

Internet

There are many great sites, many of which have script examples. Because these sites change so often, only a few are listed here, but don't neglect this huge source of information. Sites in the educational domain (ending in “.edu”) are sometimes more helpful, but not necessarily. Take some time to search for and bookmark a few sites that you prefer; it is worth the effort. (Tip: keep a special eye out for sites that let you ask or post questions.) At the time of this writing, a combined search for “UNIX”, “UNIX scripts” and “shell scripts” using the search engine Hotbot yielded 1,088 sites. Many of them have built-in help.

Here is a good one to start with that covers the basics very well:

http://www.cs.mu.oz.au/~bakal/shell/shell1.html

Here is one of my favorites:

http://www.oase-shareware.org/shell/

And of course, don’t forget this one:

http://www.tradeservice.com

man pages

The manual pages (man pages) on your system are a great source of information. This is especially true because the thing you are looking up is usually exactly which option to use with a utility, for example the difference between “grep -v” and “grep -n”. From the command line, enter

$ man grep

Building a UNIX script, step-by-step

The instructions below walk you through the process of building a UNIX script. The way this works is that the script calls a CQ macro and takes care of any error handling, and the macro calls the CQ query. As the final step, we will build a complete working script/macro/query and, in this example, use CQJOB.SH to kick off the script in the nightly jobstream.

Start with your PATH

The PATH environment variable is the list of directories, separated by colons, that the shell searches (in order) when you type the name of a command or script. You will need a separate PATH for every directory where you plan on keeping and running shell scripts. Let's assume that you are going to be working primarily in your nightly jobstream directory. Chances are that the file /tss/macros/CQJOB.SH is already on your system, and chances are that it already points to the /cqcs/jobstream/ directory. If this file is not there, you may need TSS to put it there and give it their blessing.

Here is a sample:

PATH=$PATH:/cqcs:/cqcs/programs:/data13/live:/cqcs/jobstream; export PATH
cd /cqcs/jobstream
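To see what the shell does with PATH, here is a small sketch you can paste into a shell. A temporary directory made with mktemp stands in for /cqcs/jobstream, and hello_path.sh is a made-up script name, so nothing on the real system is touched. The sketch uses command -v to ask where the shell finds a command. Older Korn shells also offer whence for this.

```shell
# Demonstrate how PATH is searched. The temporary directory stands in
# for /cqcs/jobstream; hello_path.sh is a made-up example script.
demo_dir=$(mktemp -d)
cat > "$demo_dir/hello_path.sh" <<'EOF'
#!/bin/sh
echo "found via PATH"
EOF
chmod +x "$demo_dir/hello_path.sh"

# Before: the directory is not on PATH, so the name is not found.
command -v hello_path.sh || echo "hello_path.sh is not on the PATH yet"

# Append the directory the same way the sample PATH line does.
PATH=$PATH:$demo_dir; export PATH

# After: the shell searches each PATH directory in order and finds it.
command -v hello_path.sh
hello_path.sh
```

When you are done, the demonstration directory can be removed with rm -r "$demo_dir".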

Copy these lines into a file that will permanently reside in your /cqcs/jobstream/ directory, and name it path_jobstream.txt.

Whenever you want to start a new shell script, issue this command as your first step:

$ cat path_jobstream.txt > new_script.sh

You will probably want to modify the path_jobstream.txt file (see the next section on using vi) so that it looks like this:

#! /bin/ksh
##########################################################
#
#
#
##########################################################
PATH=$PATH:/cqcs:/cqcs/programs:/data13/live:/cqcs/jobstream; export PATH
cd /cqcs/jobstream
#########################################################
########################### END #########################

Note that the script begins with #! /bin/ksh. That line tells UNIX to run the script with the Korn shell, which prevents you from accidentally invoking the wrong shell when your script is executed. This file now has room in the box at the top for you to type in the name of the script, what it does, the date, your name, etc. Whenever the shell sees the octothorpe (#) symbol at the start of a word, it treats the rest of that line as a comment.
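The cat step can be taken a notch further. Below is a sketch of a small shell function called new_script. The name is made up; it is not an AIX or TSS utility. The function does the following:

- copies path_jobstream.txt to a new script;
- fills the first three empty “#” lines of the comment box with the script name, a description placeholder, and the date plus your login name;
- makes the new file executable.

It assumes your template has the box shown above. The TEMPLATE variable is a hypothetical override for the template's location. The function refuses to overwrite an existing file.

```shell
# new_script NAME.sh : start a new script from path_jobstream.txt
# (hypothetical helper; set TEMPLATE to use a template elsewhere).
new_script() {
    template=${TEMPLATE:-path_jobstream.txt}
    name=$1
    if [ -z "$name" ]; then
        echo "usage: new_script NAME.sh" >&2
        return 1
    fi
    if [ ! -r "$template" ]; then
        echo "cannot read template $template" >&2
        return 1
    fi
    if [ -e "$name" ]; then
        echo "$name already exists; not overwriting" >&2
        return 1
    fi
    # Fill the first three lone "#" lines of the header box, in order:
    # script name, a description placeholder, then date and author.
    awk -v n="$name" -v d="$(date +%m/%d/%Y)" -v u="${LOGNAME:-$USER}" '
        $0 == "#" && filled < 3 {
            filled++
            if (filled == 1)      print "# " n
            else if (filled == 2) print "# what this script does: "
            else                  print "# " d ", " u
            next
        }
        { print }
    ' "$template" > "$name" && chmod +x "$name"
}
```

Typical use would be “$ new_script aging_report.sh” followed by “$ vedit aging_report.sh” to fill in the body.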

One of the most common things you will do when writing a script is print it out so you can stare at it and wonder why it isn't working. Putting the “## END ##” box in your file also makes it easy for you to print a bunch of your scripts at the same time and still be able to read them. Without the END box, the scripts may appear to have no definite starting and ending point. To print all your scripts from the /cqcs/jobstream directory:

$ lp -d01p1 /cqcs/jobstream/*.sh

(Substitute your print queue for the “01p1” part.)

Don't remove the “cd /cqcs/jobstream” line; it is NOT excess. A script starts out in whatever directory the program that called it happens to be in. Depending on how complex your scripts become, they may be started by cron, by CQJOB.SH, or by other scripts running somewhere else. Keep this line in all scripts.

Remember, you must have a different PATH for each directory. All scripts that reside in that directory should have that PATH. If you were to make a directory called “/cqcs/new”, you would change the last entry in the PATH (and the cd line) from /cqcs/jobstream to /cqcs/new:

PATH=$PATH:/cqcs:/cqcs/programs:/data13/live:/cqcs/new; export PATH
cd /cqcs/new
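If keeping a separate copy of the PATH line for every directory becomes a chore, there is a common alternative. It is not the method this document uses. The script works out its own directory from $0 (the path it was started with), changes to it, and adds it to PATH. A sketch, assuming the script is started by a path that includes its directory: a full pathname, or a lookup through PATH, which is how cron and CQJOB.SH would normally call it.

```shell
# Alternative header lines: find the directory this script lives in
# from $0, cd there, and add that directory to PATH instead of
# typing it in by hand.
script_dir=$(cd "$(dirname "$0")" && pwd)
cd "$script_dir" || exit 1
PATH=$PATH:/cqcs:/cqcs/programs:/data13/live:$script_dir; export PATH
```

Called as /cqcs/jobstream/myscript.sh, this sets script_dir to /cqcs/jobstream. It may not give the right answer when the file is run with the dot command, because in many shells $0 then names the calling shell rather than the script.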

Creating a script with vi

Here is the world's shortest course on using the vi editor:

To create a new file or edit one that already exists, type:

$ vedit newfile.txt

If the file “newfile.txt” does not exist, one is created. If the file already exists, it is opened for editing.

Commands:

The first thing that you need to do is tell vi what mode you want to be in. Here is the entire list of commands you will ever need in your entire career. Note that “<escape> <escape>” means to hit the escape key twice.

<escape> <escape> i       Inserts typing BEFORE the cursor.
<escape> <escape> a       Appends typing AFTER the cursor.
<escape> <escape> x       Removes the character the cursor is on.
<escape> <escape> dd      Deletes the line the cursor is on.
<escape> <escape> :q!     Quits without saving your work.**
<escape> <escape> :w!     Writes (saves) your work.**
<escape> <escape> :wq!    Writes (saves) and quits.**

** The colon (:) is required before these commands. The bang (!) after them forces the command. For example, :q! quits even if there are unsaved changes, where a plain :q would refuse.

There are whole books written on vi, but this list of commands will probably be all you ever need.

(Note: the advantage of using the “vedit” command is that the “novice” option is enabled, meaning that the input mode message appears in the lower right-hand corner. If you do not want this feature, use the “vi” command.)

You may want to copy the file for safety before editing it:

$ cp filename.sh filename.sh.old

If you want to copy and paste a file from the Internet (or from this document, for example) into your system, you can do it without downloading the file. Here is how:

1. Copy the text into your Windows clipboard as usual.
2. Create your file:
   $ vedit newscript.sh
3. Get into insert mode:
   <escape> <escape> i
4. Now just paste your text as usual. It works 99% of the time. The only problem this may cause is that sometimes a new line appears in the middle of a command that does not like it. If this happens to you, your script will say something like “new line not expected”.

Most IBM terminals and PCs will let you use the arrow keys to move around the document while using vi. If your arrow keys do not work and you want to use the keyboard to navigate (instead of getting a new PC), you will need to use <escape>h (left), <escape>j (down), <escape>k (up) and <escape>l (right). Get a book…

Making a script executable

What makes a script run is the fact that the system interprets the file as something it can execute.

$ ls -la ch*
-rwxrwxrwx 1 prog users 516 Feb 21 1992 chdir.sh
-rwxrwxrwx 1 prog users 120 Aug 26 1994 check.sh

We know that the above scripts are both executable because of the “x” characters in the permissions at the beginning of each line. After the first character, the three “rwx” groups are the read, write and execute permissions for the owner, the group, and everyone else.

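You can make any file executable by entering

$ chmod +x anyfile.txt

or

$ chmod 777 anyfile.txt

(Be aware that 777 also gives every user permission to change the file, while +x only adds execute permission.)

You can remove execute permissions by entering

$ chmod -x anyfile.txt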

If you are not the owner of the file (the name that appears in the third column when you enter the ls -la command), you might have to log in as root to do this.
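A script that creates or copies other scripts can check and fix the execute bit itself with the test -x operator. This is a small sketch. The function name make_runnable is made up, and it uses chmod u+x (owner only) on purpose rather than 777.

```shell
# make_runnable FILE... : give the owner execute permission on each
# file, but only if it is not already executable. (Hypothetical helper.)
make_runnable() {
    status=0
    for f in "$@"; do
        if [ ! -f "$f" ]; then
            echo "$f: no such file" >&2
            status=1
        elif [ ! -x "$f" ]; then
            if chmod u+x "$f"; then
                echo "made $f executable"
            else
                status=1    # e.g. you are not the owner
            fi
        fi
    done
    return $status
}
```

For example, “make_runnable /cqcs/jobstream/*.sh” reports only the scripts it actually had to change.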

You can also execute any file as a shell script, no matter what its execute permissions are, by typing “ksh” in front of the file name. The file does still have to be readable by you.

$ ksh anyfile.txt

Once a file is executable, you should be able to run it just by entering its name, as long as the directory it is in is on your PATH.

$ anyfile.txt

If the file still will not execute, try calling it by its full pathname, or by entering a dot and a space first.

$ /cqcs/jobstream/anyfile.txt

or

$ . /cqcs/jobstream/anyfile.txt
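The dot is not just another way of starting the script. It runs the commands inside your current shell instead of in a new one, so a cd or a variable set by the script stays in effect after it finishes. The sketch below shows the difference using a throwaway script; goto_demo.sh and the temporary directory are made up for the demonstration.

```shell
# Compare running a script with "dotting" it. goto_demo.sh is a
# throwaway script that changes directory and sets a variable.
unset GREETING
work=$(mktemp -d)
cat > "$work/goto_demo.sh" <<EOF
#!/bin/sh
cd "$work"
GREETING=hello
EOF
chmod +x "$work/goto_demo.sh"
start=$(pwd)

# 1. Run it: a new shell does the cd, so this shell stays put.
"$work/goto_demo.sh"
after_run=$(pwd)
echo "after running: pwd=$after_run GREETING=${GREETING:-unset}"

# 2. Dot it: the commands run in this shell, so the cd and variable stick.
. "$work/goto_demo.sh"
after_dot=$(pwd)
echo "after dotting: pwd=$after_dot GREETING=${GREETING:-unset}"

cd "$start"    # go back to where we began
```

This is also why a dotted script that ends with “exit” will log you out of your current shell.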

Making an example script

Let's make a file called example_script.sh in your /cqcs/jobstream directory using the previous steps, namely making a PATH and then editing with vi. This is what it should look like:

#! /bin/ksh
##########################################################
# example_script.sh
#
# test script that proves I am a genius
#
# today's date, your name
##########################################################
PATH=$PATH:/cqcs:/cqcs/programs:/data13/live:/cqcs/jobstream; export PATH
cd /cqcs/jobstream
echo " $LOGNAME, you are a genius! Congratulations! "
########################################################
###################### END #############################

For this example I am assuming we are already in /cqcs/jobstream/ and are logged in as “jsmith”.

From the command line, check the permissions:

$ ls -la example*
-rwxrwxrwx 1 jsmith users 643 Sep 21 2000 example_script.sh

OK, looks good, so let's run it (if it doesn't run, refer to “Making a script executable”):

$ example_script.sh
jsmith, you are a genius! Congratulations!

There: your script just did what you told it to! Congratulations indeed!

Troubleshooting your script

Well, first let's make a script that fails. Let's modify the previous example script and give it a command we cannot execute. Specifically, let's add this line:

#! /bin/ksh
##########################################################
# example_script.sh
#
# test script that proves I am a genius
#
# today's date, your name
##########################################################
PATH=$PATH:/cqcs:/cqcs/programs:/data13/live:/cqcs/jobstream; export PATH
cd /cqcs/jobstream
"Jimmy Hoffa"      # <<<< added this line
echo " $LOGNAME, you are a genius! Congratulations! "
########################################################
###################### END #############################

Let's execute it again:

$ example_script.sh
example_script.sh[11]: Jimmy Hoffa: not found.
jsmith, you are a genius! Congratulations!

Well, we see that the script kind of worked, but part of it failed. The shell interpreted “Jimmy Hoffa” as a command on line 11 of the script and returned the error message “Jimmy Hoffa: not found” when it came to that part. OK, I stole this joke, but it still demonstrates that the script as a whole ran while this one line did not work.
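The script kept going after the bad line because, by default, the shell does not stop when a command fails. It does record an exit status for every command in the special variable $?. A status of 0 means success, anything else means failure, and 127 is the status POSIX shells use for “command not found”. Here is a minimal sketch that reuses the “Jimmy Hoffa” line and checks that status instead of ignoring it:

```shell
# The shell does not stop when a command fails; it records the
# failure in $? (0 = success). 127 means "command not found".
status=0
# || runs only on failure, and $? there is the failed command's status.
"Jimmy Hoffa" 2>/dev/null || status=$?
if [ "$status" -ne 0 ]; then
    echo "previous command failed with exit status $status"
fi
echo "the script keeps going after the failure"
```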

What happens when a script fails

There are lots of ways that a script can fail. Sometimes the script works but doesn't do what you want it to do; sometimes it exits and doesn't finish the rest of the script, etc. In addition to reading this portion on troubleshooting, be sure to see the “Controlling errors” section, which goes into much more detail about how to handle errors in working scripts.

Troubleshooting using the shell -x option:

If we execute the same example_script.sh with the ksh -x command, we can watch each command as the shell executes it, along with its output.

$ ksh -x example_script.sh

+ PATH=:/cqcs/jsmith:/cqcs:/cqcs/programs:/data13/live:.:/tss/macros:/lib
include:/etc:/bin:/usr/bin:/usr/sbin:/usr/local/bin:/usr/dbin:/usr/vsifax3
cqcs:/cqcs/programs:/data13/live:/cqcs/jobstream
+ export PATH
+ cd /cqcs/jobstream
+ Jimmy Hoffa
example_script.sh[11]: Jimmy Hoffa: not found.
+ echo jsmith, you are a genius! Congratulations!
jsmith, you are a genius! Congratulations!

What happens when using the -x option is that whenever the shell comes across a line it interprets as a command, it prints a “+” and the command. Then it prints the output or result of that command, if there is any. (Note that no comments are listed, because they are not commands.)

Note that not all commands have anything to output. The command “cd /cqcs/jobstream” executed fine, but nothing printed, because the “cd” utility just does its job without saying anything unless something goes wrong. If we wanted to make sure it worked, we would put the command “pwd” after the “cd” command so we could see what directory we are in.
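You do not have to trace the whole script. Inside a script, set -x turns the same tracing on and set +x turns it off again, so you can watch only the lines you suspect. Below is a small sketch using the cd-then-pwd check described above (with /tmp standing in for a real directory). The trace lines are written to standard error, so the sketch captures them in a temporary file to show where they go.

```shell
# Trace only the middle of a script. Trace output goes to standard
# error, which is captured here in a file to show where it lands.
trace_file=$(mktemp)
{
    echo "not traced"
    set -x          # from here on, each command is printed with a +
    cd /tmp
    pwd
    set +x          # tracing off again
    echo "not traced either"
} 2> "$trace_file"
echo "--- trace lines captured from standard error:"
cat "$trace_file"
```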

Note: if you notice that the PATH in the trace is longer than the one in the script, it is because I executed this command from the CLI utility in CQ. The PATH expansion therefore shows the PATH I already had at that command line as well as the explicit PATH I specified in the script itself.

Troubleshooting Tips

Here are some more miscellaneous troubleshooting tips to help you analyze errant scripts:

Be sure to save a copy…

By far the most frequent problem is typos. Read your work carefully. Have you correctly referred to all files, etc.?

Add “sleep 2” above or below each command. This will slow down your script long enough to read any output. (“2” is 2 seconds.)
o Example: sleep 2

Send only the errors to an error file with the “2>” redirection.
o Example: example_script.sh 2> example_errors.txt

Add “echo” lines that tell what your script is about to do.
o Example: echo "next line is supposed to cd to my home directory"

Echo variables after every command to see whether the command changed them.
o Example:
echo $variable
command
echo $variable

Comment out possible offending commands.
o Example: # command

Be especially careful with the “rm” utility: the script may run so fast that it removes a file before the file has been printed, for example. Use the “wait” or “sleep” commands.

Use the tee utility to record what flashes across your screen. Because the -x trace is written to standard error, add 2>&1 so that tee records it along with the normal output:

$ ksh -x testscript.sh 2>&1 | tee what_in_the_hell_is_wrong_with_this_script.txt

Build a CQ Macro File

Many of your UNIX scripts will probably consist of just the following line:

$ cq my_macro.mf

This command executes the program called “cq” in /cqcs/programs, which takes any file with a “.mf” extension and tries to run the commands within the file. Much of the complexity will reside in your query itself, so executing it is the least of your worries.

You can create your macros directly from the CQ editor, the same way that you write the queries. Here is an example of a macro that uses the most common commands, with comments that explain the significance of each command.

/**** COMMENTS: This is how comments are entered ******/
/**** They can also
span
more than 1 line *****/

Important: all these queries, macros, and scripts must be in the same directory as this macro! If you are writing your queries and macros in your own home directory (which is a good idea), you will have to copy them into your /cqcs/jobstream directory if you want them all to be executed by the CQJOB.SH script.

/************************************************
RSAVE VS RREPLACE IN A CQ MACRO:
The query below is run and the report is saved as queryname.cq. Note that no .eq or .cq extensions are used. The problem with “rsave” is that if the report already exists, the macro will fail. The report name can be different from the query name. **/
run queryname
rsave queryname
/** The rreplace command will save the report, and if there is already a report there it will overwrite it. **/
run queryname
rreplace queryname

/**************************************************
PARAMETER FILES IN A CQ MACRO:
When you create a report that requires parameters to be entered, a “.par” parameter file is required. When you run a query and type in parameters at your keyboard, you are creating this file. You are not limited to keyboard entry. I have written scripts that create a parameter file and then pass that file to a CQ query, others where one CQ query creates a parameter file to be passed to another query, and still others that retrieve parameters from non-Array machines to use as parameter files. No matter where the parameter file comes from, here is the syntax to use a parameter file to run a query.
**/
run/parameterfile=filename.par queryname
spool/que=ARGB/overwrite reportname
spool/que=lpt/overwrite reportname
/** also note that the same report can be printed to multiple queues **/

/**********************************************
COLLECTIONS IN A CQ MACRO:
The query below uses the “find” type of CQ query to create a collection, which is saved by the “csave” or the “creplace” command. Here is what the complete, one-line query “find_bpr_det_today.eq” contains:
find bpr_det with sav_inv:inv_date = todaysdate
and this is how you would use it in a macro to run it and save a collection. **/
run find_bpr_det_today
csave bpr_det_today
/** or you could use creplace instead of csave **/
creplace bpr_det_today
/** The query below runs against this (above) subcollection, thereby speeding up multiple references to large files such as the bpr_det file. The “list” switch in the query will look like this:
list/collection="bpr_det_today" **/
run/sub=bpr_det_today queryname
spool/que=lpt/overwrite queryname

/** If you use the “csave” command to save a collection, CQ will not save another collection if there is already a file with that name there. You must do one of the following:
Use “creplace” instead:
o creplace bpr_det_today
Remove the collection files (from the shell, or with “cli rm” inside a macro):
o rm bpr_det_today.co bpr_det_today.ch
Have CQ unsave the collection:
o cunsave bpr_det_today
**/

/***************************************************
USING GENREC:
Running a subsequent query against genrec.cq is a cinch. Just save the report from the first query as genrec. See more info on this topic in the CQ tips section. ***/
run queryname1
rreplace genrec
/** (or you could use rsave) **/
run queryname2

/****************************************************
USING CLI WITHIN A CQ MACRO
You can even access the command line interpreter (CLI) right in the middle of your macro. Just enter “cli” followed by the UNIX command, and the command will execute right there. You may be able to sidestep a lot of scripting with this technique! Here are some useful examples. **/
run queryname
rreplace reportname
/** mail the report **/
cli mailx -s "subject" jsmith < reportname.cq
/** print it **/
cli lp -d01p1 reportname.cq
/** copy it **/
cli cp reportname.cq /home/who/genrec.cq
/** rename it **/
cli mv reportname.cq newname.txt
/** remove it **/
cli rm reportname.cq
/** send a form feed to the printer **/
cli echo "\f" | lp -d01p1

A Few CQ Tips

Chances are that you have mastered CQ. However, there are a few things that are especially useful with scripting, and just to make sure you are aware of them I will review them here.

Using genrec.cq to make multiple passes

You can write a query that creates a correctly formatted report and saves the file as genrec.cq. You can then run another query against genrec.cq (used as the domain). This is particularly useful in shell scripting, because chances are that you will run your reports as part of an automated process such as the nightly jobstream. If you can rename a .cq report as genrec.cq, you can automate multiple passes easily. This is not strictly a shell scripting issue, since all of this can be done in a macro, but the ability to move files into genrec.cq has many broader applications than just CQ. For example, it is possible to pull data from another computer (a PC, say) or from a different, non-Array application, and use CQ to create reports from it.

It is important to specify the column for each field you include in the report. Here is an example of a query called open_order_capture.eq that will make a report for later use as genrec.cq. This same query will later be used as part of an entire subsystem that demonstrates some practical uses of shell scripting. Note that the “list” fields are all locked into place with the “/column” switch, and that the “list” statement produces a flat file:

LIST/nodefaults/nopageheadings/noreporttotals/duplicates/pagewidth=255/pagelength=0/nobanner/noheadings

Here is an example of a query called open_order_capture_compare_3day.eq that is written to use the genrec.cq file created by the first report.

It is important to note that:

The domain of this query is genrec. (LIST/domain="genrec")

The entire genrec record is 255 STRING characters, which are accessed by specifying which character is required (for example, genrec:whole_rec[1] would access the first character).

Access to all files (inv_mst, whse_re, etc.) must be explicitly defined.

Any genrec character that is supposed to correspond to a field that is actually a number (such as whse_re:ware) is defined as the same type of number as the field you are accessing, for example “define unsigned binary”. You can tell what kind of number you are looking at by opening VCQ (not VCQ for Windows) and turning on the help window to see the record type.

You can make pass after pass, each time naming the report genrec, but if you have to do that, it is time to reevaluate your technique. There are many ways to skin a cat…
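Because the genrec record is read by character position, anything you move into genrec.cq from outside CQ has to be laid out in fixed columns first. Here is a sketch that uses awk to turn a tab-delimited file (for example, one pulled from a PC) into fixed-width lines. The input name pc_export.txt and the three field widths are assumptions for the example. Set them to match the column positions your genrec query expects, and keep the total within the 255-character record.

```shell
# to_genrec FILE : turn a tab-delimited file into fixed-column records
# that a genrec query can read by character position.
# Hypothetical layout (change to match your query):
#   columns  1-15  part number, left-justified (longer values are cut)
#   columns 16-18  warehouse, right-justified
#   columns 19-28  quantity, right-justified
to_genrec() {
    awk -F '\t' '
        length($2) > 3 || length($3) > 10 {
            print "line " NR ": field too wide, skipped" | "cat 1>&2"
            next
        }
        { printf "%-15.15s%3s%10s\n", $1, $2, $3 }
    ' "$1"
}
```

Usage would be along the lines of “$ to_genrec pc_export.txt > genrec.cq”, followed by running the query whose domain is genrec. Lines with a warehouse or quantity too wide for its columns are reported and skipped rather than silently shifting every field after them.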

Creating a zero-length report

Perhaps the most important type of report you can learn to develop is the report that is always looking to see whether a condition has occurred that warrants intervention. I have hundreds of reports and scripts in my jobstream looking for conditions that may require some sort of action. Only when some threshold is exceeded is anyone even aware of them, either because they receive an email or because a report prints. In a sense I have automated the process of managing the function itself.

This is a very important tool to grasp. It is central to good management from both the IT and the general management perspectives.

Here is the trick: turn off everything that can print even when the report does not find anything, such as headers, titles, totals, page headings, etc. Enclose every portion of the query that could report something in an “if” statement that can only be true if the where statement returns something. If you are searching whse_re, maybe “if whse_re:ware > 0 then {…”, or if you were searching ord_det, then “if ord_det:order <> 0 then {…”. Here is an example of a real query called open_ord_w_frt_lines.eq that only creates a report when it finds orders that meet the criteria in the where statement.

Of course, you could also use the logic “if weekday(todaysdate) = 4 then {…” to conditionally print reports on certain days of the week. That is fine as long as the file you are accessing is a small one (not bpr_det, for example).

If a zero-file-size report is printed, nothing will print, not even a blank page, so you can just issue the print command within your macro. A zero-file-size report CAN be emailed, though, so a test must be performed to determine whether the file size is zero before mailing the report. (test -s is true only if the file exists and is not empty.) The script would include something like this:

if test -s file.cq
then
    mailx -s "subject" jsmith < file.cq
fi

See the section on using email for more information.
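When several reports need the same treatment, the test can be wrapped in a small function. This is a sketch only: mail_if_not_empty is a made-up name. The MAILER variable is a hypothetical hook that lets the function be tried out with a stand-in command instead of really sending mail; when it is not set, mailx is used.

```shell
# mail_if_not_empty FILE SUBJECT RECIPIENT...
# Mails FILE only when it exists and is not empty (test -s).
# MAILER is a hypothetical testing hook; mailx is used when it is unset.
mail_if_not_empty() {
    file=$1
    subject=$2
    shift 2
    if test -s "$file"; then
        ${MAILER:-mailx} -s "$subject" "$@" < "$file"
    else
        echo "$file is empty or missing; no mail sent" >&2
        return 1
    fi
}
```

For example: mail_if_not_empty open_ord_w_frt_lines.cq "Orders with freight lines" jsmith jjones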

One very important point: if you create reports that only appear when a condition occurs, long stretches may pass before the recipient sees the report again. Get in the habit of printing, on the report itself, the name of the person who should get it and the procedure for handling it.

Using email

The power of email as a method of delivering the output of scripts is astonishing. This section is a basic email toolbox that covers the commands and how to use them. There are other examples of using email in some of the scripts that are supplied.

Mailx vs mail

The mailx utility is “extended mail”, the successor to the mail utility. For the most part IBM has done an excellent job of integrating the mailx functions into AIX, so in most cases it won't make a difference whether you use mailx or mail. However, there are occasions where it does make a difference, so get in the habit of using mailx instead of mail.

email to individual(s) automatically

(All these examples send the text in the BODY of the email message.)

To email a file named file.txt to an individual local user named jsmith, use this command:

$ mailx -s "subject here" jsmith < file.txt

To send to several local users and one non-local user:

$ mailx -s "subject" jsmith jjones name@somewhereelse.com < file.txt

To send directly from the command line or from within a script:

$ echo "
Dear Joe,
Wish you were here." | mailx -s "subject" jsmith

To send to jsmith and copy to jjones with a blind copy to fred:

$ mailx -c jjones -b fred -s "subject" jsmith < file.txt

To send to all the users in the file called mail_list.txt:

$ mailx -s "subject" `cat mail_list.txt` < file.txt

Note that this command uses “back quotes”, sometimes called “grave quotes”, to run the cat mail_list.txt command. They are NOT single quotes.
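Here is a variation on the mail_list.txt idea, offered as a suggestion rather than the original method. Filter the list through grep so that blank lines and lines starting with # are ignored. That way you can note who each address is for, or comment someone out for a while, without deleting them. The function name list_recipients is made up.

```shell
# list_recipients FILE : print the addresses in a mailing-list file,
# skipping blank lines and lines whose first non-blank character is #.
# (Hypothetical helper.) Example file contents:
#   # sales managers
#   jsmith
#   # fred      (on vacation until the 15th)
#   jjones
list_recipients() {
    grep -v -e '^[[:space:]]*#' -e '^[[:space:]]*$' "$1"
}
```

It is used in place of the cat command: $ mailx -s "subject" `list_recipients mail_list.txt` < file.txt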

To send a mail with a very long subject:

$ subj="Did you feel you had to have a really long subject?"
$ mailx -s "$subj" jsmith < file.txt

To send the standard screen output of any utility to jsmith:

$ who -u | sort | mailx -s "subject" jsmith

To send only the errors from script.sh to jsmith:

$ script.sh 2> errors.txt ; mailx -s "subject" jsmith < errors.txt

To send only the standard screen output to jsmith:

$ script.sh 1> stdout.txt ; mailx -s "subject" jsmith < stdout.txt

Note: there is more than one way to do the above two examples… See the section on redirection in UNIX shell scripting basics.

email attachments automatically

To email a file.txt to jsmith as an attachment called file2.tab:

$ uuencode file.txt file2.tab | mailx -s "subject" jsmith

To send a tab-delimited attachment file created by a CQ query and have it arrive as an Excel file attachment:

$ uuencode reportname.cq reportname.xls | mailx -s "subject" jsmith

NOTE: There is now a better way to do this. Write a regular query and

include the /dif switch after the “list” part. Then use this syntax:

$ uuencode reportname.dif reportname.xls | mailx -s "subject" jsmith

To send an attachment to jsmith with the contents of the file mailtext.txt in

the BODY:

$ (cat mailtext.txt; uuencode file.txt file2.txt) | mailx -s "subject" jsmith

To send an attachment using the echo utility to provide BODY of message:

$ (echo "
Dear Array User,
Body of message can go here, and
can span multiple lines. The echo portion
will not close until the closing double quotes
appear.
" ; uuencode file.txt file2.txt ) | mailx -s "subject" jsmith

The output of any commands you put between the parentheses will be sent in the mail. This allows you to send more than one attachment as well as text in the body of the email. Separate your commands with a semicolon. For example:

$ (echo "
Hello,
I just wanted to show off my new skills
by sending this email to you.
"; uuencode file.txt file2.txt; uuencode another_file.txt another_file2.txt) | mailx -s "subject" jsmith
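If you send the same kind of message from several scripts, the parenthesized commands can be wrapped in a function. This is a minimal sketch: build_message and the ENCODER variable (which defaults to uuencode and lets you swap in something else for a dry run) are hypothetical names, not part of mailx or uuencode. It uses $( ), the Korn shell form of back quotes.

```shell
# build_message BODYFILE [ATTACHMENT...]   (hypothetical helper)
# Write the body text, then one uuencoded copy of each attachment, to
# standard output as a single stream that can be piped into mailx.
build_message() {
    body=$1
    shift                          # the remaining arguments are attachments
    cat "$body"
    for f in "$@"
    do
        # uuencode SOURCE NAME: NAME is the file name the recipient sees
        ${ENCODER:-uuencode} "$f" "$(basename "$f")"
    done
}

# Usage (not run here):
#   build_message mailtext.txt file.txt another_file.txt | mailx -s "subject" jsmith
```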

email using .forward files

At this company we spent a huge amount of time setting up and configuring

UNIX sendmail, multiple MS Exchange servers, email clients, etc. It was

one of the biggest and most complex IT projects ever undertaken here. It is a

wonder that anybody ever gets any email at all. However, much of that setup can be overridden by a .forward file in the user’s home directory. This is a great way to simplify a lot of the headaches of setting up email, and perhaps even DNS issues.

All that needs to happen to make a .forward file work is to make a file for this

user that contains the email address that you want all system email to go to.

So if the user jsmith is an outside salesman and also has an account at home

called “bigdaddy@aol.com” that he wants to receive email as, go to the

/home/jsmith directory and create the file called .forward that contains only

the final destination email address.

Root needs to be the owner of this file, so you should probably do the following logged in as root. You can do it all in one shot:

$ echo "bigdaddy@aol.com" > /home/jsmith/.forward

You may be able to sidestep a lot of email and DNS issues by entering a .forward file for each local user. If your company’s domain is “mycompany.com” and you normally address a local user as jsmith, you may want to put jsmith@mycompany.com into a .forward file for this person. You will still be able to reach him locally as jsmith, but in fact his Internet email address will be where he gets his email. You may also need to modify your /etc/hosts file to define the IP address of “mycompany”. Solutions to strange address-resolution problems may also be discovered if you use the IP address of the target server, “jsmith@[172.16.1.254]” for example (the square brackets mark it as an IP address rather than a host name). Whatever works for you…
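If you decide to give every local user a .forward file, a small loop saves typing. This is a sketch under a few assumptions: a hypothetical map file with one “user address” pair per line, and a HOME_BASE variable (default /home) so you can try it in a scratch directory first. make_forwards is an illustrative name, and it only runs chown when it is run as root.

```shell
# make_forwards MAPFILE   (hypothetical helper)
# MAPFILE lines look like:  jsmith jsmith@mycompany.com
# For each user with a home directory, write the address into .forward.
make_forwards() {
    while read user address
    do
        [ -z "$user" ] && continue                 # skip blank lines
        dir=${HOME_BASE:-/home}/$user
        if [ -d "$dir" ]
        then
            echo "$address" > "$dir/.forward"
            # root ownership, as described above; only root can chown
            if [ "$(id -u)" -eq 0 ]
            then
                chown root "$dir/.forward"
            fi
        else
            echo "no home directory for $user" >&2
        fi
    done < "$1"
}

# Usage (as root, not run here):
#   make_forwards /tmp/forward_map.txt
```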

There is a problem with the way that TSS runs their nightly

/tss/macros/rootclean.sh script. It changes ownership of all files in the entire

home directory to prog. If root does not own the .forward file it will not

work. On my system I have modified the rootclean.sh script to include the

line /tss/macros/forward_file_fixer.sh at the end of the file.

This is what the script looks like:

#! /bin/ksh
########################################################
# forward_file_fixer.sh
#
# change owner to root of any .forward files in all home directories
########################################################
# change to /home directory (stop if that fails)
cd /home || exit 1
# start a “for” loop and assign the variable i (item) to each name in the
# output of the ls (list) command, then test to see if there is a file
# named .forward in each home directory.
# (you can see more about “test” in the test section of UNIX basics)
for i in `ls`
do
    if test -f "/home/$i/.forward"
    then
        chown root "/home/$i/.forward"
    fi
done

If you are having a problem with .forward files not working correctly because of ownership issues, and you feel some discomfort about putting this script into your rootclean.sh script by yourself, then contact TSS support to have them do this for you. They are aware that their practice of reassigning owners is a little too inclusive in cases like this, and they will be happy to take care of it. If you get someone who doesn’t seem to understand the issue, refer them to this document.

email /etc/alias file

The idea that one can use an alias to email a “real” user is wonderful. It is especially good with shell scripting, because in many cases the same recipient will appear in many different scripts. So instead of naming the exact person who looks after the scripts that deal with Sales Orders, I can create an alias called ORDERADMIN that will receive any email directed toward this function. When this person goes on vacation, all I have to do is change the name in the /etc/aliases file, not all my scripts.

The process of creating an alias is simple:

1. Logged in as root, vi the /etc/aliases file. (See “All the vi commands you will ever need” if you need a refresher.)

2. Edit and save your file.

3. Type this command to rebuild the alias database:

# sendmail -bi

Here are some examples:
(I am showing these aliases in caps, but they don’t have to be.)

An alias can be an individual:
ORDERADMIN: jsmith

An alias can be a list of local and/or remote users:
MANAGERS: jjones, sbrown, pwhite, doofus@aol.com

They can also include other aliases:
WHSE1MGR: jblow
WHSE1CREW: jbaker, jsmith
WHSE1ALL: WHSE1MGR, WHSE1CREW

They can dump the mail without saving it:
autojs: /dev/null

They can include print queues where reports might be printed. This is handy when you are e-mailing a report to other people and also want a copy on paper: just add the printer alias to the list of recipients.
LPT: "|lp -dlpt"
ARGB: "|lp -dARGB"
ARPP: "|lp -dARPP"
MFGB: "|lp -dMFGB"
MFPP: "|lp -dMFPP"

Notes:

1. You may want to create an alias for each inside and outside salesman ID, named exactly as the ID appears in the ord_hdr:in_slm or ord_hdr:out_slm fields, that points to the matching salesperson (for example, 002: jsmith). When we examine how to email a specially formatted CQ report, you will be able to automate the email process that parses through the report and determines who to send to, but the aliases have to be there in order for it to work.

2. There is one email address that appears in all the example

scripts included with this document that you will want to

create: CQADMIN. There are others too, so keep an eye out

for them as you review the script examples.
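Because the example scripts mail to aliases such as CQADMIN, it can help to check that an alias exists before a script relies on it. A minimal sketch, assuming each alias starts at the beginning of a line in /etc/aliases as in the examples above; alias_defined is a hypothetical name. (sendmail -bv NAME is another way to see how sendmail itself would expand an address.)

```shell
# alias_defined NAME [FILE]   (hypothetical helper)
# Succeeds (status 0) if FILE, by default /etc/aliases, has a line
# beginning with "NAME:". This is a plain text check; it does not ask
# sendmail, so it will not notice an alias file that was never rebuilt.
alias_defined() {
    grep "^$1:" "${2:-/etc/aliases}" > /dev/null 2>&1
}

# Usage in a script (not run here):
#   alias_defined CQADMIN || echo "CQADMIN alias is missing" >&2
```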

email zero file size

In the CQ Tips section we reviewed how to make a CQ report with a zero file size. You will recall that if a zero-file-size report is printed, nothing will print, not even a blank sheet of paper, so for printing you can simply issue the print command within your macro. A zero-file-size report CAN be emailed, though, so a test must be performed to determine whether the file size is zero before mailing the report.

The script would include something like this:

if test -s file.cq
then
    mailx -s "subject" jsmith < file.cq
fi

The “test -s” portion means: test this file to see if it has a size greater than zero. If it does, then mailx…

The very same command can be written as

if [ -s file.cq ]

then

    mailx -s "subject" jsmith < file.cq

fi

If you use this method, then remember that there must be spaces on either

side of the “[“ and “]” brackets.

The “if” statement in a shell script is pretty much like CQ “if” statements,

except that it has to end in “fi” (if spelled backwards).
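When several reports need the same check, the test can be wrapped in a small function. This is a sketch, not part of CQ or mailx: mail_if_nonempty is a hypothetical name, and MAILER (default mailx) is an assumption that lets you substitute a stand-in command while testing.

```shell
# mail_if_nonempty SUBJECT RECIPIENT FILE   (hypothetical helper)
# Mail FILE only if it exists and its size is greater than zero.
mail_if_nonempty() {
    if [ -s "$3" ]
    then
        ${MAILER:-mailx} -s "$1" "$2" < "$3"   # the mailer's status is returned
    else
        return 1                               # empty or missing: nothing sent
    fi
}

# Usage (not run here):
#   mail_if_nonempty "Open orders" jsmith file.cq || echo "file.cq was empty"
```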

email using a script from a parsed CQ report

First of all, the definition of parse is to separate something into the separate

elements that make up that thing. For example, we could parse this sentence

into individual words. In the computer world, after a program has run

through a file and separated the elements, the file is considered parsed.

Because this is a little more complex than some of the other tools in this

toolbox, at the close of this section we will have created a complete

application from start to finish. This application will run once every night

and send each salesperson an email with a recap of their quotes that were

entered that day. Furthermore, if a salesman is hired, fired, or killed by

someone in the IT department, all that needs to change is the /etc/aliases file.

There will be no changes to the code required. This is also an extremely time

and computing resource efficient method.

Step 1. Create the Query

This query, called quote_outsales_parse.eq, will create a single report that we

will then use with a UNIX script to sort and separate (parse) the data on the

report, and then, for each parsed element send the output in the body of an

email to the correct recipient.

The output of the query looks like this:

002 QUOTES FOR YOUR CUSTOMERS ENTERED ON: 09/08/2000
002 -----------------------------------------------
002 Summary by product line. For item-level details see Array.
002 QUOTE  CUSTOMER_NAME                       DOLLARS  SLM
002 -----------------------------------------------------------------------
002 649038 MILWAUKEE WIDGET MFG INC                     DGM
002        po:
002        200 APPLETON FITTINGS                 21
002        555 REGAL FITTINGS                    22
002        via:
002                                  Total:      43
002
002 End of report for this outside salesperson
002
005 QUOTES FOR YOUR CUSTOMERS ENTERED ON: 09/08/2000
005 -----------------------------------------------
005 Summary by product line. For item-level details see Array.
005 QUOTE  CUSTOMER_NAME                       DOLLARS  SLM
005 -----------------------------------------------------------------------
005 649075 BIG BUSINESS EQUIPMENT CORP                  SEM
005        po:
005        290 HOFFMAN                          435
005        via: OTME (MILW EAST)
005                                  Total:     435
005
005 End of report for this outside salesperson
005

You can see that two different numbers are repeated down the left-hand column, “002” and “005”. These are the outside salesman numbers for the quotes that were entered today. (We only have two quotes, so I guess we weren’t too busy today.) Our objective is to parse this report using the left-hand column and send each section to the alias with the same name. Note that each section of the report has its own header.

Step 2. Alias the parse target file.

The first thing to do is to make sure that all our salespeople are set up with aliases in the

/etc/aliases file. If you haven’t done this yet then stop and do it. Once you have this process

licked you will definitely want to repeat this with other scripts you write. See the email /etc/alias file section if you need a refresher.

Step 3. Create a CQ Macro

Here is the macro named quote_outsales.mf. I prefer to name the report the same as the query; it kind of keeps everything together.

/*** quote_outsales.mf
***/
run      quote_outsales_parse
rreplace quote_outsales_parse

Step 4. Create a shell script.

You may need to refer back to the “how to create a shell script” section.

Let’s jump right in and look at the script. Here is a version without a bunch of comments. I will

repeat each line again to explain each detail. Before we go on, I need to credit the original

author of this script – Mike Swift of North Coast. I have made some modifications to the one he

supplied, but if you feel overwhelmingly driven to send any royalty checks, please send them to

him. (I have just numbered these for later reference – don’t number yours.)

1.  #! /bin/ksh
2.  ##################### BEGIN #############################
3.  #
4.  # quote_outsales.sh
5.  #
6.  # send short version of today’s quotes to out_slm aliases
7.  #
8.  PATH=$PATH:/cqcs:/cqcs/programs:/data13/live:/cqcs/cqjobstream; export PATH
9.  cd /cqcs/jobstream
10. cq quote_outsales.mf > mferror
11. cqerr.sh
12.
13. cat quote_outsales_parse.cq | cut -c1-4 | uniq > quote_outsales_list.txt
14.
15. exec < quote_outsales_list.txt
16.
17. while read salescode
18. do
19.     grep "^$salescode" quote_outsales_parse.cq >> tempqt.txt
20.     subject="Today's Quotes for Slm $salescode"
21.     if test -s tempqt.txt
22.     then
23.         mailx -s "$subject" $salescode < tempqt.txt
24.     fi
25.     rm tempqt.txt
26. done
27. exec <&-
28.
29. #################### END ##########################

Before we start, notice that there is some strategic indentation here. Hopefully using CQ has

taught you how to be neat, because shell scripting is many times more difficult to decipher.

We already covered the first part (lines 1-9) of this script in the section regarding PATH, so the

first two lines for us to look at are 10 & 11:

10. cq quote_outsales.mf > mferror

11. cqerr.sh

The “cq” command invokes the “cq” program in /cqcs/programs to start the CQ macro. The “>

mferror” portion of this line and the “cqerr.sh” on the next line are used to capture and email

errors, and since these are handled in the CQ macro error section of this document we will skip

over these for now.

13. cat quote_outsales_parse.cq | cut -c1-4 | uniq > quote_outsales_list.txt

This line uses cat to read the report file that was created by the CQ macro and pipes it to the “cut” command, which keeps only the first four characters of each line (the salesman number and the character after it). That output is piped to the uniq utility, which collapses each run of identical adjacent lines into a single line, and the result is redirected to a file called quote_outsales_list.txt. If we were to look in this file after this line runs, we would see two lines that look like this:

$ cat quote_outsales_list.txt

002

005

Well, will you look at that; we have managed to come up with the parsing target list!
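One caution: uniq only removes duplicate lines that sit next to each other. That is fine here because the report is grouped by salesman number, but if a code could show up again further down, sort -u is safer. A small demonstration with made-up sample lines (not real CQ output):

```shell
# Sample report lines; each starts with a salesman number (made-up data).
work=${TMPDIR:-/tmp}/uniq_demo.$$
mkdir -p "$work"
printf '002 QUOTES\n002 649038\n005 QUOTES\n002 late line\n' > "$work/report.cq"

# uniq collapses only ADJACENT repeats, so 002 shows up twice here.
cut -c1-4 "$work/report.cq" | uniq > "$work/list_uniq.txt"

# sort -u sorts first, so each code appears exactly once.
cut -c1-4 "$work/report.cq" | sort -u > "$work/list_sorted.txt"

cat "$work/list_uniq.txt"     # three lines: 002, 005, 002
cat "$work/list_sorted.txt"   # two lines: 002, 005
```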

15. exec < quote_outsales_list.txt

When exec is given a redirection but no command, it does not run anything. Instead, it changes the standard input of the script itself: the “<” character redirects the file quote_outsales_list.txt into the script, so from this point on every “read” takes its next line from this file (which we know is actually our parsing target list). One important feature of exec worth mentioning is that it works on the current shell and does not spawn a sub shell. (Not too important here, but remember it for when you suspect you have a problem with execution in a sub shell.)
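Here is a small, self-contained demonstration of what line 15 does, using made-up codes in a scratch file. It also saves and restores the original standard input on descriptor 3, which you would only need if a script had to read from the keyboard again afterward; quote_outsales.sh simply closes standard input on line 27 instead.

```shell
# Made-up parse target list in a scratch file.
work=${TMPDIR:-/tmp}/exec_demo.$$
mkdir -p "$work"
printf '002\n005\n' > "$work/list.txt"

exec 3<&0                  # save the original standard input on descriptor 3
exec < "$work/list.txt"    # from now on, standard input is the list file
codes=""
while read salescode       # each read takes the next line of the file
do
    codes="$codes $salescode"
done
exec 0<&3 3<&-             # restore standard input and close descriptor 3

echo "codes read:$codes"   # prints: codes read: 002 005
```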

17. while read salescode

This line has three components. The word “while” begins a loop that keeps running for as long as the command after it succeeds. That command is “read”, which takes the next line of the parsing target list and assigns it to the variable salescode; when there are no more lines to read, read fails and the loop stops. “While” loops are not used as often as “for” loops. As a matter of fact this script could have been written with a “for” loop just as well, but this one works, so we will stick with it. A while loop consists of four parts: the “while” part, the “do” command, the command(s) that the loop is supposed to execute, and the “done”. For each salescode, the loop runs through the commands after “do” until it hits the “done”. When there are no more salescodes for “read” to read, the script proceeds past the “done”. There is more about loops in the UNIX basics section.

19. grep "^$salescode" quote_outsales_parse.cq >> tempqt.txt

This line uses the grep utility to look back through the original CQ report. Grep returns each entire line in which it finds the value of the salescode variable. To make sure that grep only matches codes that appear at the beginning of a line, we put the “^” caret symbol in front of the variable. The whole pattern is enclosed in double quotes so that it stays together as one argument while the shell still replaces $salescode with its value. The “^” symbol is used this way in many other UNIX utilities too.

The next thing to look at is $salescode. You will recall that this variable was assigned back on the “while read salescode” line: any word that follows “read” is treated as the name of a variable. When a variable is assigned, you do not use the “$” in front. When you refer to a variable after it has been assigned, you do use the “$” in front. So if we had put a command like echo $salescode before the “done” line, the first time through the loop it would have printed 002, and the next time 005. Finally, the “>>” operator redirects the output to the file called tempqt.txt, but instead of overwriting the file every time, it appends the new lines to whatever is already in the file.
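To see why the caret matters, here is a tiny test with made-up report lines in which the digits 002 also appear inside a quote number. Without the caret, grep would put that line into salesman 002’s email.

```shell
work=${TMPDIR:-/tmp}/anchor_demo.$$
mkdir -p "$work"
# Made-up report lines: quote 649002 belongs to salesman 005.
printf '002 649038 MILWAUKEE WIDGET MFG INC\n005 649002 BIG BUSINESS EQUIPMENT CORP\n' > "$work/parse.cq"

salescode=002
anchored=`grep -c "^$salescode" "$work/parse.cq"`    # lines that START with 002
unanchored=`grep -c "$salescode" "$work/parse.cq"`   # also matches quote 649002

echo "anchored=$anchored unanchored=$unanchored"      # anchored=1 unanchored=2
```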

20. subject="Today's Quotes for Slm $salescode"

This line assigns a value to the variable “subject” for later use with the mail command. All it takes to assign a variable is the “=” sign, with no spaces on either side (you also saw the “read” command do it). Note that it is assigned AFTER the $salescode variable has been set by the while loop. If you feel like a little self-education, try moving this line around to different parts of the script to see how variable assignments work.

21. if test -s tempqt.txt
22. then
23.     mailx -s "$subject" $salescode < tempqt.txt
24. fi

The UNIX shell “if” construct is pretty easy to understand because it is a lot like CQ, the most obvious difference being the “fi” (backwards if) that closes the if statement. We also saw this example in the email zero file size section. The “test -s” statement checks whether the file tempqt.txt has a file size greater than zero. If it does, the statement is true and the “then” portion runs. (There is more on this construct in both the if and the test sections.) You may wonder why the test for file size is required. If everything is perfect, it does not need to be there. But if I somehow break the next version of the query, there may be something in the parse target list that cannot be found when the script greps through the report, which will result in a file size of zero. Perhaps an enhancement to this script would be to send an email to the system administrator when this occurs, but I will leave that for you. On line 23, the $subject variable supplies the subject of the email, and the $salescode variable is the recipient.

25. rm tempqt.txt

This line removes the temporary file so that it starts out empty on the next pass through the while loop.

26. done

If there are more salescodes to read, the script returns to the “while” line; otherwise this line ends the while loop.

27. exec <&-

This line closes the script’s standard input (the list file that was opened on line 15), and the script exits.

Step 5. Copy all this into the /cqcs/jobstream directory

Assuming you have made the necessary changes to the alias file for CQADMIN and the outside

salesmen, then these are the pieces of the puzzle you will need to make this all work:

The quote_outsales_parse.eq CQ query

The cqerr.sh error-capturing UNIX shell script.

The quote_outsales.mf CQ macro

The quote_outsales.sh UNIX shell script.

(don’t forget to make the shell scripts executable!)

Once you have the quote_outsales.sh working correctly, then you can modify your

/tss/macros/CQJOB.SH script, but test first. The instructions on modifying your CQJOB.SH

script are in a separate section, as well as details regarding error capture. You should understand

both before installing these scripts permanently on your system.
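Before adding the script to CQJOB.SH, it can help to test the parsing without sending any mail. Here is a minimal dry-run sketch of the same loop, assuming you point it at a copy of quote_outsales_parse.cq: instead of calling mailx, it writes each salesperson’s section to its own file so you can check what each person would receive. split_report and the output directory are hypothetical names, and it uses sort -u rather than uniq so the order of the report does not matter.

```shell
# split_report REPORT OUTDIR   (hypothetical dry-run helper)
# For each salesman number in the first four columns of REPORT, write
# that salesperson's lines to OUTDIR/<number>.txt instead of mailing them.
split_report() {
    report=$1
    outdir=$2
    mkdir -p "$outdir"
    cut -c1-4 "$report" | sort -u |
    while read salescode
    do
        [ -n "$salescode" ] || continue          # skip blank lines
        grep "^$salescode" "$report" > "$outdir/$salescode.txt"
    done
}

# Usage (not run here):
#   split_report /cqcs/jobstream/quote_outsales_parse.cq /tmp/quote_check
#   ls -l /tmp/quote_check
```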

UNIX Shell Scripting Basics

This section is not intended to be a complete listing of what you need to know to effectively

write shell scripts. I only intend to gloss over the few things that I believe are most important.

You will need some sort of reference book at your side, and a good, 1,000 page book is

referenced here, so don’t be discouraged if this document doesn’t go into the detail you need…

redirection

The “|” operator. (pipe)

The pipe operator connects the standard output of the first command to the standard input of the second command. For example, the command

$ who -u | sort | grep tty | pg

will take all the output of who -u and pass it to the sort utility, which will put it in order by the first field; sort passes its output to the grep utility, which keeps only the lines that contain the characters “tty”; and the result is finally passed to the pg utility, which presents it to you one screen at a time.

The “>” operator.

When a utility is executed, its output goes to standard output by default, which normally means your screen. Example:

$ echo "Echo sends to standard output, meaning your screen."
Echo sends to standard output, meaning your screen.

Echo’s output doesn’t always have to go to your screen, though. You can control where echo sends its output with redirection. For example:

$ echo "Anything that I want to type." > newfile.txt
$ cat newfile.txt
Anything that I want to type.
$ echo "Type something else." > newfile.txt
$ cat newfile.txt
Type something else.

From the above examples you can see that if newfile.txt did not exist already, then the

system created it. If it already did exist then the new output from echo overwrites the file.

Standard error from a shell script can be sent to a file instead of your screen too:

$ script.sh 2> scripterrors.txt

Standard error and standard output can even go to different files:

$ script.sh 2> scripterrors.txt 1> what_should_go_to_screen.txt
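You can also send both streams to the same file with 2>&1, which means “make standard error go wherever standard output is going right now.” Because of that “right now,” the order of the redirections matters. A small demonstration; demo_cmd is a made-up stand-in for script.sh:

```shell
work=${TMPDIR:-/tmp}/redir_demo.$$
mkdir -p "$work"

# Hypothetical stand-in for script.sh: one line to each stream.
demo_cmd() {
    echo "report line"        # standard output (descriptor 1)
    echo "error line" >&2     # standard error (descriptor 2)
}

# Right: first point descriptor 1 at the file, then copy it to 2.
demo_cmd > "$work/both.txt" 2>&1

# Reversed: 2 is copied from the OLD descriptor 1 (your screen) before
# 1 is pointed at the file, so "error line" still appears on the screen.
demo_cmd 2>&1 > "$work/stdout_only.txt"
```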

The “>>” operator.

If you want to add new text to the end of a file instead of overwriting it, use the “>>” operator like this:

$ echo "Let’s try this again." >> newfile.txt

$ cat newfile.txt

Type something else.

Let’s try this again.

The “<” operator.

The “<” operator makes an existing file the standard input of the command in front of it. For example, these two commands accomplish the same thing:

$ cat newfile.txt | mailx -s "subject" jsmith

$ mailx -s "subject" jsmith < newfile.txt

The difference is that the first example required both the “cat” and “mailx” utilities, but the

second example the file was efficiently passed to the mailx utility by the shell in a single

operation. There are certain commands that are best executed this way.

The tee utility.

The tee utility is kind of like the “tee” fitting a plumber might install in a pipe, and is always

part of a “piped” expression, so it is well named. This command will send the output of a

command to two places at once – one to your screen, and one to a file.

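$ who -u | tee whofile.txt

Note: This is especially useful when troubleshooting a script. The trace from ksh -x is written to standard error, so add 2>&1 to send it through the pipe and into the file as well:

$ ksh -x testscript.sh 2>&1 | tee what_the_hell_is_wrong.txt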

quotation marks

This topic deserves more study than I am going to devote to it, but there are books that can do the job, so here is a very, very brief look at quoting. Shell scripting uses punctuation extensively.

double quotes (“”)

Uses:

Keeps strings together

$ echo "two words" > can_we_find_this_again.txt

$ grep two words *

grep: 0652-033 Cannot open words.

Try it again with double quotes:

$ grep "two words" *

can_we_find_this_again.txt: two words

Allows variables to be interpreted inside them, but otherwise turns off most symbolic character interpretation. The symbols that are NOT turned off are ` (back quote), $ and \. (Use the backslash to prevent interpretation of these symbolic characters.)

$ echo "My name is $LOGNAME"
My name is jsmith

$ echo "My name is \$LOGNAME"
My name is $LOGNAME

If you start with a double quote, the shell looks for the matching closing quote. Until it sees one, it keeps reading new lines (showing the > secondary prompt) instead of executing the echo command, for example:

$ echo "
> I can type a whole paragraph if I feel
> like it. "

I can type a whole paragraph if I feel
like it.

Single quotes (‘’)

Uses:

Keeps all special symbols from being interpreted as metacharacters.

$ echo '$LOGNAME'

$LOGNAME

Back quotes (``) (also called “grave” quotes)

Big advantage:

They cause the shell to execute the enclosed text as a command and put the command’s output in its place.

$ mailx -s "subject" `cat maillist.txt` < file.txt
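The Korn shell (like any POSIX shell) also accepts $( ) for the same job. It is easier to tell apart from single quotes, and it nests without extra backslashes. A quick comparison:

```shell
# Both forms run the command and substitute its output.
with_backquotes=`echo a b c`
with_dollar=$(echo a b c)

# Nesting: each inner command is just another $( ).
nested=$(echo "inner says: $(echo hello)")

echo "$with_backquotes"   # a b c
echo "$with_dollar"       # a b c
echo "$nested"            # inner says: hello
```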

variables

UNIX makes it ridiculously easy to assign a variable. All you need is the “=” sign, the “read” command, or a “for” loop. From then on you just refer to it with a $ sign in front. Here are some scripts and what they look like when executed.

Using the “=” sign:

$ cat assign_var.sh

myvariable=amazing

echo $myvariable

$ assign_var.sh

amazing
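The one rule that trips up almost everybody: there can be no spaces around the “=”. With spaces, the shell thinks the variable name is a command. A short demonstration (the if runs the bad line in a sub shell and throws away its error message):

```shell
myvariable=amazing                 # correct: no spaces around "="

# Wrong: the shell tries to run a command named "myvariable" with the
# arguments "=" and "wrong", so no assignment happens at all.
if (myvariable = wrong) 2>/dev/null
then
    spaced="a command called myvariable ran"
else
    spaced="no assignment; the command failed"
fi

echo "$myvariable"                 # amazing
echo "$spaced"                     # no assignment; the command failed
```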

Using “read”:

$ cat assign_read_var.sh

echo "Enter something and hit enter:"

read whatevername

echo $whatevername

$ assign_read_var.sh

Enter something and hit enter:

Life is good!

Life is good!

Using a loop:

(more on loops in the loop section.)

$ cat test_loop.sh

for myvariable in a b c

do

echo "the value of myvariable for this element is: $myvariable"

done

$ test_loop.sh

the value of myvariable for this element is: a

the value of myvariable for this element is: b

the value of myvariable for this element is: c

Loops (for loop, while loop, until loop)

There are three types of loops: the for loop, the while loop, and the until loop. In each of them, the commands between “do” and “done” are repeated. A for loop repeats them once for each element of a list (such as the words of a file), assigning each element in turn to a variable; while and until loops repeat them based on the result of a test.

The for loop is used the most and is easiest to write and understand.

The whole loop can be typed directly at the command line like this (this one echoes each word in the file itemfile.txt):

$ for i in `cat itemfile.txt`

> do

> echo $i

> done

(Note: i is often used to assign the target variable, but the variable can be

named anything you want. The “i” originally meant item, and stems from

the days when every character was used conservatively to save memory.)

Or the list can be written directly into a script like this (leave the list unquoted; "a b c" in quotes would be one single element):

for myvariable in a b c

do

echo "the value of myvariable for this element is: $myvariable"

done

Or it can come from the contents of a file:

for i in `cat file.txt`

do

echo "the value of i is $i"

done

Or it can be the output of a command:

for anyname in `ls`

do

echo "This is a file in the current directory: $anyname"

done

(Note: that those are grave quotes or back quotes surrounding the ls

command. This tells the shell to execute the enclosed as a command, and

put the output on the command line.)

Be aware that the for loop executes for each word in a file!
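Here is a small demonstration of that warning, using a scratch file with two made-up customer names. The for loop sees four words; a while read loop sees two lines, which is usually what you want when each line is a record:

```shell
work=${TMPDIR:-/tmp}/loop_demo.$$
mkdir -p "$work"
printf 'MILWAUKEE WIDGET\nREGAL FITTINGS\n' > "$work/names.txt"

words=0
for w in `cat "$work/names.txt"`    # one pass per WORD
do
    words=$((words + 1))
done

lines=0
while read line                     # one pass per LINE
do
    lines=$((lines + 1))
done < "$work/names.txt"

echo "words=$words lines=$lines"     # words=4 lines=2
```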

The while loop

Unlike the for loop, which works through a fixed list, the while loop (usually) runs a test before each pass and keeps executing the loop for as long as that test is true. What makes this useful is that you can keep the loop running until something changes.

For example, this loop (in this case entered directly on the command line) will run until the value of the quit variable is no longer equal to “n”:

$ quit="n"

$ while [ "$quit" = "n" ]

> do

> echo " do you want to quit?"

> read quit

> done

do you want to quit?

n

do you want to quit?

y

$

This loop will run forever and NEVER stop:

while :

# the colon (:) is a command that does nothing and always succeeds, so the test is ALWAYS true.

do

echo "This is the song that never ends"

done

While loops are useful when you are reading from user entry or you are feeding information to the loop from some other source, such as a logfile. These loops run until the condition is no longer true.

(To stop an endless loop like the one above, you would have to kill the process with the kill command. You would have to find the process number first:

$ ps -ef | grep scriptname

Don’t be afraid of continuous loops, just think first.)

The until loop

The until loop is the reverse of the while loop: it keeps executing as long as its condition is false, and it stops as soon as the condition becomes true. The big value of until loops is to start something that loops until it senses that another event has occurred, and then progresses to the next part of the program beyond the loop. These loops frequently sleep between checks. For example, if I want to run this script to tell jsmith when jjones is back from lunch:

until who | grep jjones > /dev/null
do
    sleep 60
done
who | grep jjones > message
mailx -s "jjones is back" jsmith < message
rm message
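The same pattern is handy for waiting on a file, for example a report that another job is still producing. This sketch adds a limit so the loop cannot run forever; wait_for_file and its arguments are hypothetical names, not an existing utility.

```shell
# wait_for_file FILE TRIES SECONDS   (hypothetical helper)
# Check for FILE up to TRIES times, sleeping SECONDS between checks.
# Returns 0 as soon as the file exists, 1 if it never appears.
wait_for_file() {
    tries=1
    until [ -f "$1" ]
    do
        if [ "$tries" -ge "$2" ]
        then
            return 1               # gave up: the file never appeared
        fi
        tries=$((tries + 1))
        sleep "$3"
    done
    return 0
}

# Usage (not run here): check once a minute for about half an hour
#   wait_for_file /cqcs/jobstream/file.cq 30 60 || echo "report never arrived"
```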

An example of the reverse syntax between the while loop and the until loop is the way you might

write the test statement for a script that presents a menu.

For example, the below two scripts are functionally identical:

A while loop would look like this:

leavechoice="n"

read leavechoice

while [ "$leavechoice" = "n" ]

do

(commands)

done

An until loop would look like this:

leavechoice="n"

read leavechoice

until [ "$leavechoice" != "n" ]

# “!=” means “NOT equal”

do

(commands)

done

Testing for conditions

Performing a test on a command or a file and then conditionally executing a function is one of

the most important aspects of shell scripting. There are several ways to write the test command.

Because we are using CQ that may produce a zero file size, this may be your most used

command.

if test -s file.cq

then

echo "the filesize of file.cq is greater than zero"

fi

Is the same thing as

if [ -s file.cq ]

then

echo "the filesize of file.cq is greater than zero"

fi

Note that there are spaces on either side of the “[” and “]” brackets. It won’t work without them.

(The Korn shell also has a [[ ]] form of the test command, but I will omit it here for simplicity’s sake. You can read more about it in your man pages.)

You may also do a fair amount of testing to see if the file is there at all, which is the “-f” option.

You can compare strings

if [ "$name" = "John" ]

You can compare numbers

if [ $variable -ge 66 ]

(“-ge” means greater than or equal. The operator tells test how to compare: the integer operators such as -ge compare numbers, while = and != compare strings. If you want to be sure a variable holds a number, you can force it by performing a math operation, such as adding zero to the variable, before you evaluate it.)

And you can string together operators ( -a is “and”, -o is “or”, -lt is “less than”):

if [ "$day" = "Monday" -a "$variable" = "yes" -o $number -lt 20 ]

And you can make something logically negative (like NOT) with the exclamation point:

if [ ! "$day" = "Monday" ]

if [ ! -s file.cq ]

You can determine ranges:

if [ $count -gt 10 -a $count -lt 20 ]

You can use parentheses, but you must use the backslash to keep the shell from interpreting them

as metacharacters.

if [ \( $count -gt 10 -a $count -lt 20 \) -a \( $count2 -gt 15 -a $count2 -ge 25 \) ]
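Range tests like these are easy to reuse as a function. A minimal sketch; in_range is a hypothetical name. The comment inside shows the same test written as two [ ] commands joined by &&, which some people find easier to read than -a:

```shell
# in_range N LOW HIGH : true when N is greater than LOW and less than HIGH
in_range() {
    [ "$1" -gt "$2" -a "$1" -lt "$3" ]
    # same result: [ "$1" -gt "$2" ] && [ "$1" -lt "$3" ]
}

for count in 5 15 20
do
    if in_range $count 10 20
    then
        echo "$count is between 10 and 20"
    else
        echo "$count is outside the range"
    fi
done
```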

Test Utility Operator Table

Here is a list of string operators for the test utility:

string1 = string2     string1 is equal to string2
string1 != string2    string1 is not equal to string2
string                string is not null
-n string             string is not null (and test process can get at string)
-z string             string is null (and test process can get at string)

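Note: Enclose variables in double quotes ("$name") to prevent the shell from interpreting their contents as metacharacters, and to keep an empty variable from disappearing and causing a syntax error. (Single quotes would stop the variable from being expanded at all.) Empty or unset variables are a common source of test failures, and quoting is the simplest way to avoid them. It is also a good habit to use even when you are sure the variable will hold a value, because scripts change over time and quoting costs you nothing.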
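Quoting matters most when a variable might be empty. Without quotes the empty variable disappears, test sees a malformed expression, and it reports an error (usually status 2) instead of simply answering “false” (status 1). A small demonstration:

```shell
empty=""

# Unquoted: test sees "[ = yes ]", which is not a valid expression.
if [ $empty = "yes" ] 2>/dev/null
then
    unquoted_status=0
else
    unquoted_status=$?      # status of the failed test: an error, usually 2
fi

# Quoted: test sees an empty string compared with "yes", which is valid.
if [ "$empty" = "yes" ]
then
    quoted_status=0
else
    quoted_status=$?        # 1 means "false", not an error
fi

echo "unquoted=$unquoted_status quoted=$quoted_status"
```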

Here is a list of integer operators for the test utility:

integer1 -eq integer2    integer1 is equal to integer2
integer1 -ge integer2    integer1 is greater than or equal to integer2
integer1 -gt integer2    integer1 is greater than integer2
integer1 -le integer2    integer1 is less than or equal to integer2
integer1 -lt integer2    integer1 is less than integer2
integer1 -ne integer2    integer1 is not equal to integer2

Note: To make sure the contents of your variable are treated as an integer instead of a string, you can add zero to it. Another trick, in the Korn shell, is to declare the variable as an integer with typeset -i when the script starts; from then on, anything you assign to that variable is evaluated as an integer.

Here is a list of file operators for the test utiltity:

-d file    file is a directory
-f file    file is an ordinary file
-r file    file is readable by test process
-s file    file size is greater than zero
-w file    file is writable by test process
-x file    file is executable
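Here is a sketch of a hypothetical helper, file_report, that runs most of the file operators against one path and reports what it finds. It assumes a POSIX-style shell. Keep in mind that -r, -w and -x answer for whoever is running the script, so root will usually see "readable" and "writable" on everything.

```shell
#!/bin/sh
# Hypothetical helper: report what the file operators say about one path.
# Returns 1 if the path is neither a directory nor an ordinary file.
file_report() {
    f=$1
    if [ -d "$f" ]
    then
        echo "$f is a directory"
    elif [ -f "$f" ]
    then
        echo "$f is an ordinary file"
    else
        echo "$f does not exist or is not an ordinary file"
        return 1
    fi
    [ -s "$f" ] && echo "$f has a size greater than zero"
    [ -r "$f" ] && echo "$f is readable"
    [ -w "$f" ] && echo "$f is writable"
    [ -x "$f" ] && echo "$f is executable"
    return 0
}
```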

READ YOUR MAN PAGES ON THE TEST COMMAND. If you don’t have a book, print the test man page and keep it handy. You can print it with this command, substituting your own printer name if it is not lpt:

$ man test | lp -dlpt

The if, then, else, elif and case expressions

We have demonstrated numerous if statements, but here are a few examples again. If you haven’t read the test section yet, read it first.

# -s is true when the file exists and its size is greater than zero
if [ -s file.cq ]
then
    echo "filesize is NOT zero"
else
    echo "filesize is zero"
fi

An if statement is closed with “fi” (if spelled backward).

Let’s write the same script three different ways. These scripts will determine what grade to give

students while demonstrating each of the three major decision making methods:

the classic “else/if”

the “elif”

the “case” method.

The script below nests separate “else” and “if” statements inside one another. Each set of “if”, “then”, and “else” is usually indented so that it lines up, but indentation is up to you, of course.

if [ "$grade" -ge 90 ]
then
    echo "$grade gets an A grade"
else
    if [ "$grade" -ge 80 ]
    then
        echo "$grade gets a B grade"
    else
        if [ "$grade" -ge 70 ]
        then
            echo "$grade gets a C grade"
        else
            if [ "$grade" -ge 60 ]
            then
                echo "$grade gets a D grade"
            else
                echo "$grade gets an F grade"
            fi
        fi
    fi
fi

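You will note that this is very similar to CQ. The next version uses “elif” (“else if”) instead, which avoids the nesting and needs only one closing “fi”. Both versions behave the same, so there is no benefit to choosing one style over the other beyond readability.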

if [ "$grade" -ge 90 ]
then
    echo "$grade gets an A grade"
elif [ "$grade" -ge 80 ]
then
    echo "$grade gets a B grade"
elif [ "$grade" -ge 70 ]
then
    echo "$grade gets a C grade"
elif [ "$grade" -ge 60 ]
then
    echo "$grade gets a D grade"
else
    echo "$grade gets an F grade"
fi

Again, it must be closed with a “fi”.
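One thing the grade scripts do not handle is a grade that is not a number: if $grade is empty or contains letters, test complains instead of answering. Here is a sketch, assuming a POSIX-style shell, that wraps the elif version in a hypothetical function called letter_grade and checks the input with a case pattern first (*[!0-9]* matches anything containing a character that is not a digit). It also runs some boundary grades through the function, which is a good habit whenever you write range tests.

```shell
#!/bin/sh
# Hypothetical wrapper around the elif chain, with an input check first.
letter_grade() {
    grade=$1
    case $grade in
        ''|*[!0-9]*)
            echo "'$grade' is not a whole number" >&2
            return 1 ;;
    esac
    if [ "$grade" -ge 90 ]
    then
        echo "A"
    elif [ "$grade" -ge 80 ]
    then
        echo "B"
    elif [ "$grade" -ge 70 ]
    then
        echo "C"
    elif [ "$grade" -ge 60 ]
    then
        echo "D"
    else
        echo "F"
    fi
}

# Check the grades on either side of each boundary.
for g in 100 90 89 70 69 60 59 0
do
    echo "$g gets a grade of $(letter_grade "$g")"
done
```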

The case statement would probably be better than lots of “elses” or lots of “elif”s.

Here is an example of using a case statement to perform the same function as the previous

program.

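# case uses wildcard patterns, not numeric ranges: each [0-9] matches one digit
case $grade in
    9[0-9]|100)       echo "$grade gets an A" ;;
    8[0-9])           echo "$grade gets a B" ;;
    7[0-9])           echo "$grade gets a C" ;;
    6[0-9])           echo "$grade gets a D" ;;
    [0-9]|[1-5][0-9]) echo "$grade gets an F" ;;
    *)                echo "$grade is not a valid grade" ;;
esac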

Yep, “esac” is case spelled backward and closes the case statement. You can put lots of

commands after each element, just so long as the last one has a double semicolon (;;) after it.
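One thing to watch with case: the patterns are shell wildcard patterns, not numeric ranges. A bracket expression such as [90-100] matches exactly one character (a 9, a character in the range 0-1, or a 0), so it never matches a two-digit grade. Here is a small demonstration, assuming a POSIX-style shell; pattern_demo is a made-up function name.

```shell
#!/bin/sh
# Demonstrates that case patterns are wildcards, not numeric ranges.
pattern_demo() {
    case $1 in
        [90-100])   echo "$1 matches [90-100]" ;;      # one character: 9, 0 or 1
        9[0-9]|100) echo "$1 matches 9[0-9]|100" ;;    # 90 through 99, or 100
        *)          echo "$1 matches nothing above" ;;
    esac
}

for value in 1 9 95 100 5
do
    pattern_demo "$value"
done
```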

One of the most common uses of the case statement is to present a menu option to the user.

clear
echo "
SAMPLE MENU CHOICES

1) Show your current working directory.
2) Show who else is logged on.
3) Show today's date and time.
4) Run a CQ macro
x) Exit

Make a choice: \c"
read choice
case $choice in
    1) pwd ;;
    2) who -u | sort | pg ;;
    3) date ;;
    4) cq cqmacro.mf ;;
    x) exit ;;
    *) echo "Invalid choice \c" ;;
esac

(Note: This is the kind of thing that you would want to execute entirely in a while loop or an until loop. Put the whole script above inside a loop like this:

while :    # remember ":" is always true
do
    # Put the entire script above in here.
    # The menu will continue to be presented
    # to the user until the "x" choice is made.
done
exit

There is more on this kind of thing in the recommended books, and more on loops in the loop section.)
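Here is one way the complete loop might look, as a sketch only. It assumes a POSIX-style shell, puts the menu in a hypothetical function called run_menu, and adds a "Press Enter" pause so the clear at the top of the loop does not wipe out the output before you can read it. It uses printf for the prompt because echo with \c behaves differently from shell to shell; cqmacro.mf is the same placeholder macro name used in the menu above.

```shell
#!/bin/sh
# run_menu is a hypothetical name; cqmacro.mf is the placeholder macro above.
run_menu() {
    while :                          # ":" is always true
    do
        clear 2>/dev/null || :       # ignore errors, e.g. when TERM is not set
        echo "SAMPLE MENU CHOICES"
        echo ""
        echo "1) Show your current working directory."
        echo "2) Show who else is logged on."
        echo "3) Show today's date and time."
        echo "4) Run a CQ macro"
        echo "x) Exit"
        echo ""
        printf "Make a choice: "     # printf leaves the cursor on this line
        read choice || return 0      # end of input also ends the menu
        case $choice in
            1) pwd ;;
            2) who -u | sort | pg ;;
            3) date ;;
            4) cq cqmacro.mf ;;
            x) return 0 ;;
            *) echo "Invalid choice: $choice" ;;
        esac
        printf "Press Enter to continue: "
        read pause || return 0
    done
}
```

To use it, save it in a script, add a last line that calls run_menu, and make the script executable.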

Of course, these scripts could also be used to execute a CQ macro file, but there is no need to reinvent the wheel. Before you write your own menu for running CQ macros, take a look at the CQMENU scripts Bryan McVeigh has provided for FREE; see www.tradeservice.com to download them. (Bryan has some other cool things on that site too.)

Controlling errors

Before we can seriously consider automating our scripts, we have to be able to turn our backs on them, confident that if something goes wrong we will find out about it and learn what the problem is. The same goes for CQ macros. If you only find out that your script or macro failed because somebody calls to say they did not get a report, you are going to waste a lot of time at the minimum, and you probably won’t know where to begin fixing the problem. If something fails in any of my scripts or macros, the system emails me exactly what went wrong.

There are two different processes to use: one for catching failed CQ macros, and one for catching errors in UNIX scripts.

Catching UNIX shell script errors

The UNIX shell is absolutely wonderful about telling you that it encountered a problem. But

like most things in UNIX it is up to you to make it work.

Whenever a script runs, three special files are opened for it:

file 0, stdin, reads from standard input (usually keyboard input)
file 1, stdout, sends data to standard output (usually screen output)
file 2, stderr, sends error messages to standard error (usually screen output)

Since we know that errors are written to file 2, we need to redirect file 2 somewhere we can examine it. To do that, use either the “>” redirection operator (written “2>” for file 2) or the “tee” utility to control the standard error. (More details in the redirection section of UNIX basics.)
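To see the two output files in action, here is a small sketch, assuming a POSIX-style shell, with a hypothetical function called noisy that writes one line to file 1 and one line to file 2. The output file names are made up for the example. It also shows 2>&1, which sends file 2 wherever file 1 is going, and tee, which copies what it reads to the screen and to a file.

```shell
#!/bin/sh
# noisy is a made-up function that writes to both output files.
noisy() {
    echo "this is normal output"           # goes to file 1 (stdout)
    echo "this is an error message" >&2    # goes to file 2 (stderr)
}

# File 1 and file 2 go to separate files; nothing appears on the screen.
noisy > noisy_output.txt 2> noisy_errors.txt

# 2>&1 sends file 2 to the same place as file 1, so both lines land in one file.
noisy > noisy_all.txt 2>&1

# tee copies what it reads to the screen AND to a file. tee only reads file 1,
# so 2>&1 is needed to include the error message too.
noisy 2>&1 | tee noisy_tee.txt
```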

When a script is executed normally, standard error is sent to the screen:

$ script.sh

When standard error is redirected, it can be put into a file:

$ script.sh 2> script_errors.txt

If no errors occur, the file is still created, but with a zero file size. That means that we can perform this test after every script call where standard error is redirected:

filename=script_errors.txt
if [ -s "$filename" ]
then
    mailx -s "Script error with $filename" CQADMIN < "$filename"
fi

If you name each error file the same as its script, but with “_errors.txt” after the name, the redirection is easy to type on every line. Or, if you want to automate the whole thing, here is a loop that you can run once to pick up every error file created this way. You will get a separate email for each failure.

cd /cqcs/cqjobstream
# the shell expands *_errors.txt into the list of matching file names
for filename in *_errors.txt
do
    if [ -s "$filename" ]
    then
        mailx -s "Script error with $filename" CQADMIN < "$filename"
    fi
done
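Here is a sketch of how you might combine the redirection and the test into one hypothetical function, run_logged, so each script call is a single line. It assumes a POSIX-style shell such as ksh or bash (the ${script%.sh} expansion, which strips .sh from the end of the name, is not in the original Bourne shell) and that the CQADMIN mail alias exists on your system, as in the examples above.

```shell
#!/bin/sh
# Hypothetical wrapper: run one script with its standard error sent to
# <name>_errors.txt, then mail that file to CQADMIN if it is not empty.
run_logged() {
    script=$1
    errfile="${script%.sh}_errors.txt"   # script.sh -> script_errors.txt
    # run it with sh so it does not need execute permission
    sh "$script" 2> "$errfile"
    status=$?
    if [ -s "$errfile" ]
    then
        mailx -s "Script error with $errfile" CQADMIN < "$errfile"
    fi
    return "$status"
}
```

Because the error file is rewritten on every run, an old error does not linger once the problem is fixed.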

Checking for errors on the previous command.

Catching an error within a script is also quite easy. Whenever a command finishes, it hands an exit status back to the shell, and you can check that status with the $? shell variable. If the command worked like it was supposed to, its exit status is zero; any other value means it failed.

You can check this from the command line. This command fails:

$ ls zzzzz*

ls: 0653-341 The file zzzzz* does not exist.

$ echo $?

2

Be careful, though: a zero exit status means that the command ran without an error, not that it produced what you wanted!
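Because the next command you run replaces $?, save it in a variable right away if you want to use it more than once. Here is a sketch, assuming a POSIX-style shell, with a hypothetical helper called run_and_verify that checks both the exit status and whether the output file actually has anything in it, since a successful command can still produce an empty report.

```shell
#!/bin/sh
# Hypothetical helper.
# Usage: run_and_verify outfile command [arguments...]
run_and_verify() {
    outfile=$1
    shift
    "$@" > "$outfile"
    status=$?        # save $? right away; the next command replaces it
    if [ "$status" -ne 0 ]
    then
        echo "ERROR: $* failed with exit status $status" >&2
        return "$status"
    fi
    if [ ! -s "$outfile" ]
    then
        # the command worked, but it produced nothing
        echo "WARNING: $* worked, but $outfile is empty" >&2
        return 1
    fi
    return 0
}
```

For example, run_and_verify listing.txt ls /cqcs/cqjobstream would return ls's own non-zero exit status if the directory were missing, and 1 if the directory existed but was empty.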

Catching CQ macro errors.

If a CQ macro fails, it exits at that point and nothing beyond that point is executed. Of course, CQ politely tells you about it, but unless you are sitting there watching the screen and can read as fast as the system streams data, you won’t see the error.

What we need to do is use either the “>” redirection command or the “tee” utility to control the

standard output. (More details in the redirection section of UNIX basics.)

If the macro is executed like this, all information including any messages CQ might send

regarding errors is sent to the screen: