Learning Functions and Neural Networks II

advertisement

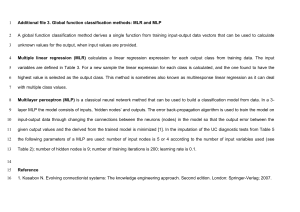

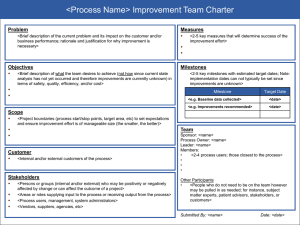

24-787 Lecture 9 Learning Functions and Neural Networks II Luoting Fu Spring 2012 Previous lecture Physiological basis Perceptron Input 0 Wb Input 1 X0 X1 W0 W1 + fH fH(x) Applications x Demos Output Y Y = u(W0X0 + W1X1 + Wb) Δ Wi = η (Y0-Y) Xi 2 In this lecture • Multilayer perceptron (MLP) – Representation – Feed forward – Back-propagation • Break • Case studies • Milestones & forefront 2 3 Perceptron A 400-26 perceptron A B C D ⋮ Z 4 © Springer XOR Exclusive OR 5 Root cause Consider a 2-1 perceptron, 𝑦 = 𝜎 𝑤1 𝑥1 + 𝑤2 𝑥2 + 𝑤0 Let 𝑦 = 0.5, Wb Input 0 Input 1 W0 W1 + fH(x) Output 𝑤1 𝑥1 + 𝑤2 𝑥2 = 𝜎 −1 0.5 − 𝑤0 = const 6 A single perceptron is limited to learning linearly separable cases. Minsky M. L. and Papert S. A. 1969. Perceptrons. Cambridge, MA: MIT Press. 7 8 An MLP can learn any continuous function. Cybenko., G. (1989) "Approximations by superpositions of sigmoidal functions", Mathematics of Control, Signals, and Systems, 2 (4), 303-314 A single perceptron is limited to learning linearly separable cases (linear function). 9 How’s that relevant? Function approximation ∈ Intelligence The road ahead Speed Bearing Waveform Wheel turn Pedal depression Words Regression Recognition 10 11 0 12 1 13 2 ℎ = tanh(𝑎 14 3 15 3 16 ∞ 17 18 Matrix representation 𝐷 𝑥∈ℝ 1 𝑀×𝐷 𝑤 ∈ℝ 𝑧 = ℎ(𝑤 1 𝑥 ∈ ℝ𝑀 2 𝐾×𝑀 𝑤 ∈ℝ 𝑦 = 𝜎(𝑤 2 𝑧 ∈ ℝ𝐾 19 Knowledge learned by an MLP is encoded in its layers of weights. 20 What does it learn? • Decision boundary perspective 21 What does it learn? • Highly non-linear decision boundaries 22 What does it learn? • Real world decision boundaries 23 An MLP can learn any continuous function. Cybenko., G. (1989) "Approximations by superpositions of sigmoidal functions", Mathematics of Control, Signals, and Systems, 2 (4), 303-314 Think Fourier. 24 What does it learn? • Weight perspective An 64-M-3 MLP 𝑥 ∈ ℝ𝐷 𝑤 1 ∈ ℝ𝑀×𝐷 𝑧 = ℎ(𝑤 1 𝑥 ∈ ℝ𝑀 𝑤 2 ∈ ℝ𝐾×𝑀 𝑦 = 𝜎(𝑤 2 𝑧 ∈ ℝ𝐾 25 How does it learn? • From examples 0 1 2 3 4 5 6 7 8 9 Polar bear Not a polar bear • By back propagation 26 Back propagation 27 Gradient descent “epoch” 28 29 Back propagation 30 Back propagation • Steps Think about this: What happens when you train a 10-layer MLP? 31 Learning curve error Overfitting and cross-validation 32 Break 33 Design considerations • • • • • • • • • Learning task X - input Y - output D M K #layers Training epochs Training data – # – Source 34 Case study 1: digit recognition An 768-1000-10 MLP 28 28 35 Case study 1: digit recognition 36 Milestones: a race to 100% accuracy on MNIST 37 Milestones: a race to 100% accuracy on MNIST CLASSIFIER ERROR RATE (%) Perceptron 12.0 LeCun et al. 1998 2-layer NN, 1000 hidden units 4.5 LeCun et al. 1998 5-layer Convolutional net 0.95 LeCun et al. 1998 5-layer Convolutional net 0.4 Simard et al. 2003 6-layer NN 784-2500-2000-15001000-500-10 (on GPU) 0.35 Ciresan et al. 2010 Reported by See full list at http://yann.lecun.com/exdb/mnist/ 38 Milestones: a race to 100% accuracy on MNIST 39 Milestones: a race to 100% accuracy on MNIST 40 Case study 2: sketch recognition 41 Case study 2: sketch recognition • Convolutional neural network Scope Transf. Fun. Gain Sum Sine wave … Or Convolution Sub-sampling Product Matrices Element of a vector (LeCun, 1998) 42 Case study 2: sketch recognition 43 Case study 2: sketch recognition 44 Case study 3: autonomous driving Pomerleau, 1995 45 Case study 4: sketch beautification Orbay and Kara, 2011 46 Case study 4: sketch beautification 47 Case study 4: sketch beautification 48 Research forefront • Deep belief network – Critique, or classify – Create, synthesize Demo at: http://www.cs.toronto.edu/~hinton/adi/index.htm 49 In summary 1.Powerful machinery 2.Feed-forward 3.Back propagation 4.Design considerations 50