Extensiveness and Perceptions of Lecture Demonstrations in the

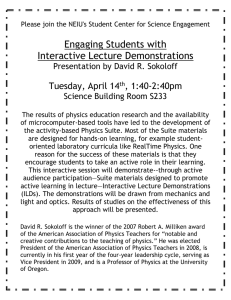
