CS 414 Review


Operating System: Definition

Definition

An Operating System (OS) provides a virtual machine on top of the real hardware, whose interface is more convenient than the raw hardware interface.

[Figure: layered view, top to bottom: Applications / OS interface / Operating System / Physical machine interface / Hardware]

Advantages

Easy to use, simpler to code, more reliable, more secure, …

You can say: “I want to write XYZ into file ABC”

What is in an OS?

Applications: Quake, Sql Server, System Utils, Shells, Windowing & graphics

OS Interface

Operating System Services: Naming, Windowing & Gfx, Networking, Virtual Memory, Generic I/O, File System, Device Drivers, Access Control, Process Management, Memory Management

Physical machine interface

Interrupts, Cache, Physical Memory, TLB, Hardware Devices

[Figure: Logical OS Structure]

Crossing Protection Boundaries

• User calls OS procedure for “privileged” operations

• Calling a kernel mode service from a user mode program:

– Using system calls

– A system call switches execution to kernel mode

[Figure: User process (user mode, mode bit = 1) → System call → Trap (mode bit = 0) → Save caller’s state → Execute system call (kernel mode) → Restore state → Return (mode bit = 1) → Resume process]

What is a process?

• The unit of execution

• The unit of scheduling

• Thread of execution + address space

• Is a program in execution

– Sequential, instruction-at-a-time execution of a program.

The same as “job” or “task” or “sequential process”


Process State Transitions

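[Figure: process state diagram with states New, Ready, Running, Waiting, and Exit; “dispatch” moves a process from Ready to Running, and an “interrupt” moves it from Running back to Ready]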

Processes hop across states as a result of:

• Actions they perform, e.g. system calls

• Actions performed by OS, e.g. rescheduling

• External actions, e.g. I/O


Context Switch

• For a running process

– All registers are loaded in CPU and modified

• E.g. Program Counter, Stack Pointer, General Purpose Registers

• When process relinquishes the CPU, the OS

– Saves register values to the PCB of that process

• To execute another process, the OS

– Loads register values from PCB of that process

Context Switch

The process of switching the CPU from one process to another

Very machine dependent: the set of registers to save depends on the architecture

Threads and Processes

• Most operating systems support two entities:

– the process,

• which defines the address space and general process attributes

– the thread,

• which defines a sequential execution stream within a process

• A thread is bound to a single process.

– For each process, however, there may be many threads.

• Threads are the unit of scheduling

• Processes are containers in which threads execute


Schedulers

• Process migrates among several queues

– Device queue, job queue, ready queue

• Scheduler selects a process to run from these queues

• Long-term scheduler:

– Loads a job into memory

– Runs infrequently

• Short-term scheduler:

– Select ready process to run on CPU

– Should be fast

• Medium-term scheduler

– Reduces the degree of multiprogramming or memory consumption

CPU Scheduling

• FCFS

• LIFO

• SJF

• SRTF

• Priority Scheduling

• Round Robin

• Multi-level Queue

• Multi-level Feedback Queue
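To make the difference between two of these policies concrete, here is a small C sketch that computes the average waiting time under FCFS and non-preemptive SJF, assuming all jobs arrive at time 0. The function names and the burst values in the note are illustrative, not from the slides.

```c
#include <assert.h>
#include <stdlib.h>

/* FCFS: jobs run to completion in array (arrival) order; all arrive at t = 0. */
double fcfs_avg_wait(const int *burst, int n) {
    assert(n > 0);
    long elapsed = 0, total_wait = 0;
    for (int i = 0; i < n; i++) {
        total_wait += elapsed;      /* job i waits for every job before it */
        elapsed += burst[i];
    }
    return (double)total_wait / n;
}

static int cmp_int(const void *a, const void *b) {
    int x = *(const int *)a, y = *(const int *)b;
    return (x > y) - (x < y);
}

/* Non-preemptive SJF with simultaneous arrivals: shortest burst runs first. */
double sjf_avg_wait(const int *burst, int n) {
    assert(n > 0);
    int *order = malloc((size_t)n * sizeof *order);
    assert(order != NULL);
    for (int i = 0; i < n; i++)
        order[i] = burst[i];
    qsort(order, (size_t)n, sizeof *order, cmp_int);
    double avg = fcfs_avg_wait(order, n);   /* SJF = FCFS over the sorted bursts */
    free(order);
    return avg;
}
```

With bursts {24, 3, 3}, FCFS averages 17 time units of waiting while SJF averages 3, which is why SJF is optimal for average waiting time when burst lengths are known.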

Race conditions

• Definition: timing dependent error involving shared state

– Whether it happens depends on how threads are scheduled

• Hard to detect:

– All possible schedules have to be safe

• Number of possible schedule permutations is huge

• Some bad schedules? Some that will work sometimes?

– they are intermittent

• Timing dependent: small changes can hide the bug

The Fundamental Issue: Atomicity

• Our atomic operation is not done atomically by the machine

– Atomic Unit: instruction sequence guaranteed to execute indivisibly

– Also called “critical section” (CS)

When two processes want to execute their critical sections,

– One process finishes its CS before the other is allowed to enter
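A minimal, deterministic sketch of why `counter++` is not atomic: it models each thread's increment as three machine steps (LOAD, ADD, STORE) and replays a chosen schedule. The three-step breakdown and all names are assumptions for illustration, not a description of any specific CPU.

```c
#include <assert.h>

/* Hypothetical model: each thread executes counter++ as three machine steps. */
typedef struct {
    int reg;    /* the thread's private register */
    int step;   /* 0 = LOAD next, 1 = ADD next, 2 = STORE next, 3 = done */
} sim_thread;

/* schedule[k] is the id (0 or 1) of the thread that executes its next step. */
int run_schedule(const int *schedule, int len) {
    int counter = 0;                          /* the shared variable */
    sim_thread t[2] = {{0, 0}, {0, 0}};
    for (int k = 0; k < len; k++) {
        assert(schedule[k] == 0 || schedule[k] == 1);
        sim_thread *th = &t[schedule[k]];
        switch (th->step) {
        case 0: th->reg = counter; break;     /* LOAD  counter -> reg */
        case 1: th->reg += 1;      break;     /* ADD   reg = reg + 1  */
        case 2: counter = th->reg; break;     /* STORE reg -> counter */
        default:                   break;     /* thread already finished */
        }
        if (th->step < 3)
            th->step++;
    }
    return counter;
}
```

The serial schedule {0,0,0,1,1,1} ends with counter == 2, while the interleaving {0,1,0,1,0,1} ends with counter == 1: both threads load 0 before either stores, so one update is lost.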

Critical Section Problem

• Problem: Design a protocol for processes to cooperate, such that only one process is in its critical section

– How to make multiple instructions seem like one?

[Figure: timeline in which Process 1 runs CS1 and Process 2 runs CS2 at non-overlapping times]

Processes progress with non-zero speed, no assumption on clock speed

Used extensively in operating systems:

Queues, shared variables, interrupt handlers, etc.


Solution Structure

Shared vars:
Initialization:
Process:
    ...
    Entry Section        (added to solve the CS problem)
    Critical Section
    Exit Section         (added to solve the CS problem)
    ...

Solution Requirements

• Mutual Exclusion

– Only one process can be in the critical section at any time

• Progress

– Decision on who enters CS cannot be indefinitely postponed

• No deadlock

• Bounded Waiting

– Bound on #times others can enter CS, while I am waiting

• No livelock

• Also efficient (no extra resources), fair, simple, …

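One classic instance of the Entry Section / Exit Section structure for two processes is Peterson's algorithm, which meets mutual exclusion, progress, and bounded waiting. This is a hedged sketch, not taken from the slides: it uses C11 `<stdatomic.h>` with the default sequentially consistent ordering (which the algorithm needs on modern hardware) and POSIX threads standing in for processes; `ITERATIONS` is arbitrary.

```c
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>
#include <stdbool.h>

#define ITERATIONS 10000

static atomic_bool flag[2];    /* flag[i]: process i wants to enter its CS */
static atomic_int turn;        /* which process must yield when both want in */
static long shared_counter;    /* touched only inside the critical section */

static void entry_section(int i) {
    int j = 1 - i;
    atomic_store(&flag[i], true);
    atomic_store(&turn, j);                       /* let the other go first */
    while (atomic_load(&flag[j]) && atomic_load(&turn) == j)
        ;                                         /* busy wait */
}

static void exit_section(int i) {
    atomic_store(&flag[i], false);
}

static void *process(void *arg) {
    int i = *(const int *)arg;
    assert(i == 0 || i == 1);
    for (int k = 0; k < ITERATIONS; k++) {
        entry_section(i);
        shared_counter++;                         /* critical section */
        exit_section(i);
    }
    return NULL;
}

/* Runs two threads through the protocol and returns the final counter. */
long run_peterson(void) {
    pthread_t tid[2];
    int ids[2] = {0, 1};
    shared_counter = 0;
    for (int i = 0; i < 2; i++)
        pthread_create(&tid[i], NULL, process, &ids[i]);
    for (int i = 0; i < 2; i++)
        pthread_join(tid[i], NULL);
    return shared_counter;
}
```

If the entry and exit sections are removed, the final count is typically below 2 × ITERATIONS because increments race; with them, every increment is preserved.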

Semaphores

• Non-negative integer with atomic increment and decrement

• Integer ‘S’ that (besides init) can only be modified by:

– P(S) or S.wait(): decrement or block if already 0

– V(S) or S.signal(): increment and wake up process if any

• These operations are atomic

semaphore S;

P(S) {
    while (S ≤ 0)
        ;   // busy wait
    S--;
}

V(S) {
    S++;
}
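The P/V pseudocode above assumes that the test and the decrement happen as one atomic step. A minimal sketch of a busy-waiting semaphore with C11 atomics, where a compare-and-swap makes "check S > 0 and decrement" indivisible; `spin_sem`, `sem_init_value`, and `try_P` are hypothetical names.

```c
#include <assert.h>
#include <stdatomic.h>

typedef struct { atomic_int value; } spin_sem;   /* hypothetical type */

void sem_init_value(spin_sem *s, int initial) {
    assert(initial >= 0);
    atomic_init(&s->value, initial);
}

/* P(S): wait while S <= 0, then decrement.  The compare-and-swap only
   succeeds if S still holds the value we tested, so no other thread can
   sneak in between the test and the decrement. */
void P(spin_sem *s) {
    for (;;) {
        int v = atomic_load(&s->value);
        if (v > 0 && atomic_compare_exchange_weak(&s->value, &v, v - 1))
            return;
    }
}

/* V(S): atomic increment; a thread spinning in P() will see the new value. */
void V(spin_sem *s) {
    atomic_fetch_add(&s->value, 1);
}

/* Non-blocking P(): returns 1 if it decremented S, 0 if S was 0. */
int try_P(spin_sem *s) {
    int v = atomic_load(&s->value);
    while (v > 0) {
        if (atomic_compare_exchange_weak(&s->value, &v, v - 1))
            return 1;                 /* on failure, v is reloaded; re-test it */
    }
    return 0;
}
```

A real kernel semaphore would put the caller on a wait queue instead of spinning; busy waiting is only reasonable for very short waits.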

Semaphore Types

• Counting Semaphores:

– Any integer

– Used for synchronization

• Binary Semaphores

– Value 0 or 1

– Used for mutual exclusion (mutex)

Shared: semaphore S

Init: S = 1;

Process i:
    P(S);
    Critical Section
    V(S);

Mutexes and Synchronization

semaphore S;

Init: S = 1;   (S = 0; for synchronization)

P(S) {
    while (S ≤ 0)
        ;   // busy wait
    S--;
}

V(S) {
    S++;
}

Process i:            Process j:
    P(S);                 P(S);
    Code XYZ              Code ABC
    V(S);                 V(S);
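For synchronization (ordering) rather than mutual exclusion, one common pattern is a semaphore initialized to 0: process i runs Code XYZ and then V(S), while process j does P(S) before Code ABC, so ABC always runs after XYZ. This sketch assumes POSIX unnamed semaphores (`sem_init`, `sem_wait`, `sem_post`) with threads standing in for processes; it targets Linux, since some platforms (e.g., macOS) do not support unnamed semaphores.

```c
#include <assert.h>
#include <pthread.h>
#include <semaphore.h>

static sem_t S;              /* Init: S = 0, used for ordering */
static char trace[3];        /* records the order in which the code ran */
static int trace_len;

static void *process_i(void *arg) {
    (void)arg;
    trace[trace_len++] = 'X';    /* Code XYZ */
    sem_post(&S);                /* V(S): announce that XYZ is done */
    return NULL;
}

static void *process_j(void *arg) {
    (void)arg;
    sem_wait(&S);                /* P(S): block until process i has signaled */
    trace[trace_len++] = 'A';    /* Code ABC */
    return NULL;
}

/* Starts j before i on purpose; returns the execution trace. */
const char *run_ordering(void) {
    pthread_t ti, tj;
    trace_len = 0;
    int rc = sem_init(&S, 0, 0);     /* not shared across processes, initial value 0 */
    assert(rc == 0);
    (void)rc;
    pthread_create(&tj, NULL, process_j, NULL);
    pthread_create(&ti, NULL, process_i, NULL);
    pthread_join(ti, NULL);
    pthread_join(tj, NULL);
    sem_destroy(&S);
    trace[trace_len] = '\0';
    return trace;
}
```

Even though process j is created first, the trace is always "XA": the semaphore, not thread creation order, decides who runs first.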

Monitors

• Hoare 1974

• Abstract Data Type for handling/defining shared resources

• Comprises:

– Shared Private Data

• The resource

• Cannot be accessed from outside

– Procedures that operate on the data

• Gateway to the resource

• Can only act on data local to the monitor

– Synchronization primitives

• Among threads that access the procedures


Synchronization Using Monitors

• Defines Condition Variables:

– condition x;

– Provides a mechanism to wait for events

• Resources available, any writers

• 3 atomic operations on Condition Variables

– x.wait(): release monitor lock, sleep until woken up

condition variables have waiting queues too

– x.notify(): wake one process waiting on condition (if there is one)

• No history associated with signal

– x.broadcast(): wake all processes waiting on condition

• Useful for resource manager

• Condition variables are not Boolean

– If(x) then { } does not make sense


Types of Monitors

What happens on notify():

• Hoare: signaler immediately gives lock to waiter (theory)

– Condition definitely holds when waiter returns

– Easy to reason about the program

• Mesa: signaler keeps lock and processor (practice)

– Condition might not hold when waiter returns

– Fewer context switches, easy to support broadcast

• Brinch Hansen: signaler must immediately exit monitor

– So, notify should be last statement of monitor procedure

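POSIX condition variables behave like Mesa monitors: `pthread_cond_signal` wakes a waiter but the signaler keeps the lock, so the woken thread must re-check its condition in a `while` loop (spurious wake-ups are also allowed). A minimal bounded-buffer monitor sketch under that assumption; the capacity and names are illustrative.

```c
#include <assert.h>
#include <pthread.h>

#define CAP 4   /* hypothetical buffer capacity */

typedef struct {
    int items[CAP];
    int head, count;
    pthread_mutex_t lock;          /* the monitor lock */
    pthread_cond_t not_full;       /* condition variable: space available */
    pthread_cond_t not_empty;      /* condition variable: item available */
} bounded_buffer;

void buf_init(bounded_buffer *b) {
    b->head = b->count = 0;
    pthread_mutex_init(&b->lock, NULL);
    pthread_cond_init(&b->not_full, NULL);
    pthread_cond_init(&b->not_empty, NULL);
}

void buf_put(bounded_buffer *b, int x) {
    pthread_mutex_lock(&b->lock);                    /* enter the monitor */
    while (b->count == CAP)                          /* Mesa: re-check after waking */
        pthread_cond_wait(&b->not_full, &b->lock);   /* x.wait(): releases the lock */
    b->items[(b->head + b->count) % CAP] = x;
    b->count++;
    pthread_cond_signal(&b->not_empty);              /* notify(): signaler keeps lock */
    pthread_mutex_unlock(&b->lock);                  /* leave the monitor */
}

int buf_get(bounded_buffer *b) {
    pthread_mutex_lock(&b->lock);
    while (b->count == 0)
        pthread_cond_wait(&b->not_empty, &b->lock);
    int x = b->items[b->head];
    b->head = (b->head + 1) % CAP;
    b->count--;
    assert(b->count >= 0 && b->count < CAP);
    pthread_cond_signal(&b->not_full);
    pthread_mutex_unlock(&b->lock);
    return x;
}

static void *producer(void *arg) {
    bounded_buffer *b = arg;
    for (int i = 1; i <= 100; i++)
        buf_put(b, i);
    return NULL;
}

/* A producer thread puts 1..100; the calling thread consumes and sums them. */
long run_monitor_demo(void) {
    bounded_buffer b;
    pthread_t p;
    buf_init(&b);
    pthread_create(&p, NULL, producer, &b);
    long sum = 0;
    for (int i = 0; i < 100; i++)
        sum += buf_get(&b);
    pthread_join(p, NULL);
    pthread_mutex_destroy(&b.lock);
    pthread_cond_destroy(&b.not_full);
    pthread_cond_destroy(&b.not_empty);
    return sum;
}
```

Replacing either `while` with `if` would be correct only under Hoare semantics, where the condition is guaranteed to hold when the waiter resumes.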

Deadlocks

Definition:

Deadlock exists among a set of processes if

– Every process is waiting for an event

– This event can be caused only by another process in the set

• Event is the acquire or release of another resource

[Figure: One-lane bridge]

Four Conditions for Deadlock

• Coffman et al., 1971

• Necessary conditions for deadlock to exist:

– Mutual Exclusion

• At least one resource must be held in a non-sharable mode

– Hold and wait

• There exists a process holding a resource, and waiting for another

– No preemption

• Resources cannot be preempted

– Circular wait

• There exists a set of processes {P1, P2, … PN}, such that

– P1 is waiting for P2, P2 for P3, …, and PN for P1

All four conditions must hold for deadlock to occur


Dealing with Deadlocks

• Proactive Approaches:

– Deadlock Prevention

• Negate one of 4 necessary conditions

• Prevent deadlock from occurring

– Deadlock Avoidance

• Carefully allocate resources based on future knowledge

• Deadlocks are prevented

• Reactive Approach:

– Deadlock detection and recovery

• Let deadlock happen, then detect and recover from it

• Ignore the problem

– Pretend deadlocks will never occur

– Ostrich approach


Safe State

• A state is said to be safe, if it has a process sequence

{P1, P2,…, Pn}, such that for each Pi,

the resources that Pi can still request can be satisfied by

the currently available resources plus the resources held

by all Pj, where j < i

• State is safe because OS can definitely avoid deadlock

– by blocking any new requests until safe order is executed

• This avoids circular wait condition

– Process waits until safe state is guaranteed

25

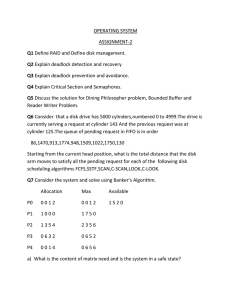

Banker’s Algorithm

• Decides whether to grant a resource request.

• Data structures:

n: integer (# of processes)

m: integer (# of resources)

available[1..m]: available[i] is # of avail resources of type i

max[1..n,1..m]: max demand of each Pi for each Rj

allocation[1..n,1..m]: current allocation of resource Rj to Pi

need[1..n,1..m]: max # of resource Rj that Pi may still request

Let request[i] be the vector of # of instances of each resource Rj that process Pi wants

Basic Algorithm

1. If request[i] > need[i] then
   error (asked for too much)

2. If request[i] > available then
   wait (can’t supply it now)

3. Resources are available to satisfy the request

Let’s assume that we satisfy the request. Then we would have:

available = available - request[i]

allocation[i] = allocation[i] + request[i]

need[i] = need[i] - request[i]

Now, check if this would leave us in a safe state:

if yes, grant the request,

if no, then leave the state as is and cause the process to wait.
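A compact C sketch of the safety check and the three request steps, using fixed sizes N = 5 processes and M = 3 resource types (an assumption to keep the arrays static). Vector comparisons are done element by element: a request "exceeds" need or available if any component does.

```c
#include <assert.h>
#include <stdbool.h>

#define N 5   /* number of processes (fixed for this sketch) */
#define M 3   /* number of resource types */

typedef struct {
    int available[M];
    int max[N][M];
    int allocation[N][M];
    int need[N][M];            /* need = max - allocation */
} bank_state;

/* Safety algorithm: is there an order in which every process can finish? */
bool is_safe(const bank_state *s) {
    int work[M];
    bool finish[N] = {false};
    for (int j = 0; j < M; j++)
        work[j] = s->available[j];
    for (int done = 0; done < N; ) {
        bool progressed = false;
        for (int i = 0; i < N; i++) {
            if (finish[i]) continue;
            bool fits = true;
            for (int j = 0; j < M; j++)
                if (s->need[i][j] > work[j]) { fits = false; break; }
            if (fits) {            /* Pi can finish and then releases everything */
                for (int j = 0; j < M; j++)
                    work[j] += s->allocation[i][j];
                finish[i] = true;
                done++;
                progressed = true;
            }
        }
        if (!progressed) return false;     /* nobody else can finish: unsafe */
    }
    return true;
}

/* Returns -1 (error: asked for too much), 0 (must wait), or 1 (granted). */
int request_resources(bank_state *s, int i, const int req[M]) {
    assert(i >= 0 && i < N);
    for (int j = 0; j < M; j++)
        if (req[j] > s->need[i][j]) return -1;        /* step 1 */
    for (int j = 0; j < M; j++)
        if (req[j] > s->available[j]) return 0;       /* step 2 */
    for (int j = 0; j < M; j++) {                     /* step 3: pretend to grant */
        s->available[j] -= req[j];
        s->allocation[i][j] += req[j];
        s->need[i][j] -= req[j];
    }
    if (is_safe(s)) return 1;
    for (int j = 0; j < M; j++) {                     /* unsafe: roll back, Pi waits */
        s->available[j] += req[j];
        s->allocation[i][j] -= req[j];
        s->need[i][j] += req[j];
    }
    return 0;
}
```

Returning 0 for an unsafe request mirrors step 3: the tentative allocation is undone and the process waits, even though the resources were physically available.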

Network Stack: Layering

[Figure: Node A and Node B each run a layered stack (Application, Presentation, Transport, Network, Data Link, Physical); the two nodes are connected through the Network]

End-to-End Argument

• What function to implement in each layer?

• Saltzer, Reed, Clarke 1984

– A function can be correctly and completely implemented only with the knowledge and help of applications standing at the communication endpoints

– Argues for moving function upward in a layered architecture

• Should the network guarantee packet delivery?

– Think about a file transfer program

– Read file from disk, send it, the receiver reads packets and writes them to the disk

Packet vs. Circuit Switching

• Reliability: no congestion, in-order data in circuit switching

• Packet switching: better bandwidth use

• State, resources: packet switching has less state

– Good: less control plane processing resources along the way

– More data plane (address lookup) processing

• Failure modes (routers/links down)

– Packet switching reconfigures on a sub-second timescale

– Circuit switching: more complicated

• Involves all switches in the path


Link level Issues

• Encoding: map bits to analog signals

• Framing: Group bits into frames (packets)

• Arbitration: multiple senders, one resource

• Addressing: multiple receivers, one wire

Repeaters and Bridges

• Both connect LAN segments

• Usually do not originate data

• Repeaters (Hubs): physical layer devices

– forward packets on all LAN segments

– Useful for increasing range

– Increases contention

• Bridges: link layer devices

– Forward packets only if destined for that segment

– Isolates congestion

– More expensive


Network Layer

Two important functions:

• routing: determine path from source to dest.

• forwarding: move packets from router’s input to output

[Figure: routers interconnected by links of different types (T1, T3, STS-1)]

Two connection models

• Connectionless (or “datagram”):

– each packet contains enough information that routers can decide how to get it to its final destination

[Figure: packets sent by host A, each carrying the destination address b]

• Connection-oriented (or “virtual circuit”)

– first set up a connection between two nodes

– label it (called a virtual circuit identifier (VCI))

– all packets carry label

[Figure: hosts A, B, and C; packets on the virtual circuit carry label 1]

DNS name servers

• How could we provide this service? Why not centralize DNS?

– single point of failure

– traffic volume

– distant centralized database

– maintenance

– doesn’t scale!

• No server has all name-to-IP address mappings

• Name server: process running on a host that processes DNS requests

• Local name servers:

– each ISP, company has local (default) name server

– host DNS query first goes to local name server

• Authoritative name server:

– can perform name/address translation for a specific domain or zone

Purpose of Transport layer

• Interface end-to-end applications and protocols

– Turn best-effort IP into a usable interface

• Data transfer b/w processes:

– Compared to end-to-end IP

• We will look at 2:

– TCP

– UDP

[Figure: the end hosts run the full stack (application, transport, network, data link, physical); the routers in between implement only network, data link, and physical]

UDP

• User Datagram Protocol

• Best effort data delivery between processes

– No frills, bare bones transport protocol

– Packet may be lost, out of order

• Connectionless protocol:

– No handshaking between sender and receiver

– Each UDP datagram handled independently


UDP Functionality

• Multiplexing/Demultiplexing

– Using ports

• Checksums (optional)

– Check for corruption

[Figure: the sender wraps application-layer data (M) in a segment with a transport header (Ht), and the network layer adds its own header (Hn); at the receiver, the transport layer uses the segment header to deliver M to the right process (P1 to P4)]
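UDP's optional checksum is the 16-bit one's-complement Internet checksum. A sketch of the core computation over a byte buffer, in the style of RFC 1071; a real UDP checksum also covers a pseudo-header containing the IP addresses, which this sketch omits.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* One's-complement sum of big-endian 16-bit words, then complemented.
   The 32-bit accumulator cannot overflow for inputs up to ~128 KB,
   which covers any UDP datagram. */
uint16_t inet_checksum(const uint8_t *data, size_t len) {
    assert(data != NULL || len == 0);
    uint32_t sum = 0;
    size_t i = 0;
    for (; i + 1 < len; i += 2)
        sum += ((uint32_t)data[i] << 8) | data[i + 1];
    if (i < len)
        sum += (uint32_t)data[i] << 8;           /* odd length: pad with a zero byte */
    while (sum >> 16)
        sum = (sum & 0xFFFFu) + (sum >> 16);     /* fold carries back in (end-around carry) */
    return (uint16_t)~sum;
}
```

For the bytes 00 01 f2 03 f4 f5 f6 f7 the result is 0x220d; running the same function over the data followed by that checksum yields 0, which is how a receiver can detect corruption.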

TCP

• Transmission Control Protocol

– Reliable, in-order, process-to-process, two-way byte stream

• Different from UDP

– Connection-oriented

– Error recovery: Packet loss, duplication, corruption, reordering

• A number of applications require this guarantee

– Web browsers use TCP


TCP Summary

• Reliable ordered message delivery

– Connection oriented, 3-way handshake

• Transmission window for better throughput

– Timeouts based on link parameters

• Congestion control

– Linear increase, exponential backoff

• Fast adaptation

– Exponential increase in the initial phase


Remote Procedure Call

• The basic model for Remote Procedure Call (RPC) was described by Birrell and Nelson in 1984, based on work done at Xerox PARC.

• Goal: make RPC as much like a local procedure call as possible.

• Used compiler/language support.

• There are 3 components on each side:

– a user program (client or server)

– a set of stub procedures

– RPC runtime support

Memory Management Issues

• Protection: Errors in process should not affect others

• Transparency: Should run despite memory size/location

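[Figure: the CPU (running gcc) issues loads and stores with virtual addresses; the translation box (MMU) checks each one: a legal address becomes a physical address sent to physical memory, which returns the data; an illegal address raises a fault]

How to do this mapping?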

Scheme 1: Load-time Linking

• Link as usual, but keep list of references

• At load time: determine the new base address

– Accordingly adjust all references (addition)

[Figure: a static a.out linked at 0x1000 to 0x3000 contains “jump 0x2000”; loaded above the OS at 0x4000 to 0x6000, the reference is adjusted to “jump 0x5000”]

• Issues: handling multiple segments, moving in memory

Scheme 2: Execution-time Linking

• Use hardware (base + limit reg) to solve the problem

– Done for every memory access

– Relocation: physical address = logical (virtual) address + base

– Protection: is virtual address < limit?

[Figure: OS and a.out in memory; a.out keeps its unmodified “jump 0x2000”, and the MMU holds Base: 0x3000 and Limit: 0x2000]

– When the process runs, base register = 0x3000 and bounds register = 0x2000. Jump address = 0x2000 + 0x3000 = 0x5000

Segmentation

• Processes have multiple base + limit registers

• A process’s address space has multiple segments

– Each segment has its own base + limit registers

– Add protection bits to every segment

[Figure: gcc’s text segment (r/o) and stack segment (r/w) placed in separate regions of real memory, each needing its own base & limit]

How to do the mapping?

Mapping Segments

• Segment Table

– An entry for each segment

– Is a tuple <base, limit, protection>

• Each memory reference indicates segment and offset

[Figure: virtual address = (Seg# 3, offset 128); the segment table entry holds Prot r, base 0x1000, len 512; if the offset is within len, the physical address is 0x1000 + 128, otherwise a fault is raised]

Fragmentation

• “The inability to use free memory”

• External Fragmentation:

– Variable-sized pieces: many small holes over time

• Internal Fragmentation:

– Fixed-sized pieces: internal waste if the entire piece is not used

[Figure: memory holding Word, gcc, emacs, doom, and stack, with free holes between them that cannot fit a new request (external fragmentation), and unused space inside an allocated piece (“internal fragmentation”)]

Paging

• Divide memory into fixed size pieces

– Called “frames” or “pages”

• Pros: easy, no external fragmentation

[Figure: gcc and emacs stored in fixed-size pages (typical: 4k-8k); the unused part of a process’s last page is internal fragmentation]

Mapping Pages

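• If the virtual address space is 2^m bytes and the page size is 2^n bytes, use (m - n) bits for the page number and n bits for the offset within the page

Translation done using a Page Table

[Figure: virtual address with VPN 3 and 12-bit page offset 128; the page table entry for VPN 3 has Prot r and PPN 1, so the physical address is ((1<<12)|128), i.e. the frame at 0x1000 plus offset 128; an entry marked “invalid” causes a fault]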

Paging + Segmentation

• Paged segmentation

– Handles very long segments

– The segments are paged

• Segmented Paging

– When the page table is very big

– Segment the page table

– Let’s consider System/370 (24-bit address space):

Seg # (4 bits) | page # (8 bits) | page offset (12 bits)

What is virtual memory?

• Each process has illusion of large address space

– 2^32 for 32-bit addressing

• However, physical memory is much smaller

• How do we give this illusion to multiple processes?

– Virtual Memory: some addresses reside in disk

[Figure: the page table maps some virtual pages to physical memory and others to disk]

Virtual Memory

• Load entire process in memory (swapping), run it, exit

– Is slow (for big processes)

– Wasteful (might not require everything)

• Solutions: partial residency

– Paging: only bring in pages, not all pages of process

– Demand paging: bring only pages that are required

• Where to fetch page from?

– Have a contiguous space in disk: swap file (pagefile.sys)


Page Faults

• On a page fault:

– OS finds a free frame, or evicts one from memory (which one?)

• Want knowledge of the future?

– Issues disk request to fetch data for page (what to fetch?)

• Just the requested page, or more?

– Block current process, context switch to new process (how?)

• Process might be executing an instruction

– When the disk completes, set the present bit to 1 and put the faulting process back in the ready queue

Page Replacement Algorithms

• Random: Pick any page to eject at random

– Used mainly for comparison

• FIFO: The page brought in earliest is evicted

– Ignores usage

– Suffers from “Belady’s Anomaly”

• Fault rate could increase when the number of frames increases

• E.g. 0 1 2 3 0 1 4 0 1 2 3 4 with 3 and 4 frames

• OPT: Belady’s algorithm

– Select the page that will not be used for the longest time in the future

• LRU: Evict page that hasn’t been used the longest

– Past could be a good predictor of the future
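Belady's anomaly can be reproduced with a short FIFO simulation. This sketch counts page faults for a reference string and a given number of frames; the `MAX_FRAMES` bound is an arbitrary assumption.

```c
#include <assert.h>

#define MAX_FRAMES 16

/* Count page faults under FIFO replacement with `frames` physical frames. */
int fifo_faults(const int *refs, int n, int frames) {
    assert(frames > 0 && frames <= MAX_FRAMES);
    int mem[MAX_FRAMES];
    int used = 0, next_victim = 0, faults = 0;
    for (int k = 0; k < n; k++) {
        int hit = 0;
        for (int f = 0; f < used; f++)
            if (mem[f] == refs[k]) { hit = 1; break; }
        if (hit)
            continue;                         /* FIFO ignores usage on a hit */
        faults++;
        if (used < frames) {
            mem[used++] = refs[k];            /* a free frame is available */
        } else {
            mem[next_victim] = refs[k];       /* evict the page loaded earliest */
            next_victim = (next_victim + 1) % frames;
        }
    }
    return faults;
}
```

Because frames are filled in order and then replaced round-robin, the slot at `next_victim` always holds the oldest page. For the string 0 1 2 3 0 1 4 0 1 2 3 4, FIFO incurs 9 faults with 3 frames but 10 faults with 4 frames.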

Thrashing

• Processes in system require more memory than is there

– Keep throwing out page that will be referenced soon

– So, they keep accessing memory that is not there

• Why does it occur?

– No good reuse, past != future

– There is reuse, but process does not fit

– Too many processes in the system


Approach 1: Working Set

• Peter Denning, 1968

– Defines the locality of a program

Pages referenced by the process in the last T seconds of execution are considered to comprise its working set

T: the working set parameter

• Uses:

– Caching: size of cache is size of WS

– Scheduling: schedule process only if WS in memory

– Page replacement: replace non-WS pages

Working Sets

• The working set size is num pages in the working set

– the number of pages touched in the interval (t - Δ, t).

• The working set size changes with program locality.

– during periods of poor locality, you reference more pages.

– Within that period of time, you will have a larger working set size.

• Don’t run process unless working set is in memory.

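A sketch of computing the working set size at time t. It measures the window Δ in memory references (a common proxy for execution time) instead of seconds, and `MAX_PAGE` is an assumed bound on page numbers.

```c
#include <assert.h>
#include <stdbool.h>

#define MAX_PAGE 64   /* hypothetical: page numbers lie in [0, MAX_PAGE) */

/* Working set size at reference index t: distinct pages in refs[t-delta+1 .. t]. */
int working_set_size(const int *refs, int t, int delta) {
    assert(t >= 0 && delta > 0);
    bool seen[MAX_PAGE] = {false};
    int size = 0;
    int start = t - delta + 1 < 0 ? 0 : t - delta + 1;
    for (int k = start; k <= t; k++) {
        assert(refs[k] >= 0 && refs[k] < MAX_PAGE);
        if (!seen[refs[k]]) {
            seen[refs[k]] = true;
            size++;
        }
    }
    return size;
}
```

For the references 1 2 1 3 4 4 4 4 4 1 and Δ = 5, the working set size is 4 at t = 4 (a burst of poor locality) but only 1 at t = 8, once the program settles on page 4.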

Approach 2: Page Fault Frequency

• thrashing viewed as poor ratio of fetch to work

• PFF = page faults / instructions executed

• if PFF rises above threshold, process needs more memory

– not enough memory on the system? Swap out.

• if PFF sinks below threshold, memory can be taken away


Allocation and deallocation

• What happens when you call:

– int *p = (int *)malloc(2500*sizeof(int));

• Allocator slices a chunk of the heap and gives it to the program

– free(p);

• Deallocator will put back the allocated space to a free list

• Simplest implementation:

– Allocation: increment pointer on every allocation

– Deallocation: no-op

– Problems: lots of fragmentation

[Figure: heap (free memory) with allocations packed from the start and a pointer marking the current free position]
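The "simplest implementation" above is often called a bump allocator. A sketch over a fixed static heap, rounding each request up to `max_align_t` alignment so returned pointers are usable for any type; the heap size and names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

#define HEAP_SIZE 4096   /* hypothetical heap size */

static _Alignas(max_align_t) unsigned char heap[HEAP_SIZE];
static size_t free_pos = 0;   /* the "current free position" */

/* Allocation: round the free position up for alignment, then bump it. */
void *bump_alloc(size_t size) {
    const size_t align = _Alignof(max_align_t);
    size_t start = (free_pos + align - 1) / align * align;
    if (start > HEAP_SIZE || size > HEAP_SIZE - start)
        return NULL;                 /* heap exhausted */
    free_pos = start + size;
    return &heap[start];
}

/* Deallocation: a no-op, so freed space is never reused (hence fragmentation). */
void bump_free(void *p) {
    (void)p;
}
```

Allocation is extremely cheap, but after `bump_free(a)` the next `bump_alloc` still returns fresh memory rather than reusing `a`, which is exactly the fragmentation problem noted above.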

Memory Allocator

• What allocator has to do:

– Maintain free list, and grant memory to requests

– Ideal: no fragmentation and no wasted time

• What allocator cannot do:

– Control order of memory requests and frees

– A bad placement cannot be revoked

[Figure: a heap with chunks of sizes 20, 10, 20, 10, 20 and earlier requests a and b: where should malloc(20) go?]

• Main challenge: avoid fragmentation

What happens on free?

• Identify size of chunk returned by user

• Change sign on both signatures (make +ve)

• Combine free adjacent chunks into bigger chunk

– Worst case when there is one free chunk before and after

– Recalculate size of new free chunk

– Update the signatures

• Don’t really need to erase old signatures


Design features

• Which free chunks should service request

– Ideally avoid fragmentation… requires future knowledge

• Split free chunks to satisfy smaller requests

– Avoids internal fragmentation

• Coalesce free blocks to form larger chunks

– Avoids external fragmentation

[Figure: adjacent free chunks of 20 and 10 are coalesced into a single free chunk of 30]

Malloc & OS memory management

• Relocation

– OS allows easy relocation (change page table)

– Placement decisions permanent at user level

• Size and distribution

– OS: small number of large objects

– Malloc: huge number of small objects

[Figure: process address space with code, data, heap, and stack]

What does the disk look like?


Disk overheads

• To read from disk, we must specify:

– cylinder #, surface #, sector #, transfer size, memory address

• Access time includes:

– Seek time: to get to the track

– Latency time: to get to the sector

– Transfer time: get bits off the disk

[Figure: disk platter showing a track, a sector, seek time, and rotation delay]

Disk Scheduling

• FCFS

• SSTF

• SCAN

• C-SCAN

• LOOK

• C-LOOK
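A sketch comparing total head movement for FCFS and SSTF. The request queue and starting cylinder in the note are illustrative, and ties in SSTF are broken by queue order (an assumption; the slides do not specify).

```c
#include <assert.h>
#include <stdlib.h>

#define MAX_REQ 64

/* FCFS: serve cylinders in arrival order; return total head movement. */
int fcfs_movement(int head, const int *req, int n) {
    int total = 0;
    for (int i = 0; i < n; i++) {
        total += abs(req[i] - head);
        head = req[i];
    }
    return total;
}

/* SSTF: always serve the pending request closest to the current head. */
int sstf_movement(int head, const int *req, int n) {
    assert(n <= MAX_REQ);
    int done[MAX_REQ] = {0};
    int total = 0;
    for (int served = 0; served < n; served++) {
        int best = -1;
        for (int i = 0; i < n; i++)
            if (!done[i] && (best < 0 || abs(req[i] - head) < abs(req[best] - head)))
                best = i;
        total += abs(req[best] - head);
        head = req[best];
        done[best] = 1;
    }
    return total;
}
```

With the head at cylinder 53 and requests 98, 183, 37, 122, 14, 124, 65, 67, FCFS moves the head 640 cylinders and SSTF 236. SSTF can starve far-away requests, which SCAN and LOOK address.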

RAID Levels

• 0: Striping

• 1: Mirroring

• 2: Hamming Codes

• 3: Parity Bit

• 4: Block Striping

• 5: Spread parity blocks across all disks

• 0+1 and 1+0
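The parity-based levels rely on XOR: the parity block is the XOR of the data blocks in a stripe, so any single lost block equals the XOR of the parity with the surviving blocks. A sketch with a tiny 4-byte block size (an assumption for readability):

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define BLOCK 4   /* tiny block size for illustration */

/* Parity block = XOR of all data blocks in the stripe. */
void compute_parity(uint8_t data[][BLOCK], size_t ndisks, uint8_t parity[BLOCK]) {
    for (size_t b = 0; b < BLOCK; b++) {
        uint8_t p = 0;
        for (size_t d = 0; d < ndisks; d++)
            p ^= data[d][b];
        parity[b] = p;
    }
}

/* Rebuild one lost data block from the parity and the surviving blocks.
   data[lost] is never read, as if that disk had failed. */
void rebuild_block(uint8_t data[][BLOCK], size_t ndisks, size_t lost,
                   const uint8_t parity[BLOCK], uint8_t out[BLOCK]) {
    assert(lost < ndisks);
    for (size_t b = 0; b < BLOCK; b++) {
        uint8_t v = parity[b];
        for (size_t d = 0; d < ndisks; d++)
            if (d != lost)
                v ^= data[d][b];
        out[b] = v;
    }
}
```

The same XOR identity also explains the small-write cost: updating one data block requires updating the stripe's parity block too, which is why RAID 5 spreads parity across all disks instead of a single parity disk.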

Stable Storage Algo

• Use 2 identical disks

– corresponding blocks on both drives are the same

• 3 operations:

– Stable write: retry on 1st until successful, then try 2nd disk

– Stable read: read from 1st. If ECC error, then try 2nd

– Crash recovery: scan corresponding blocks on both disks

• If one block is bad, replace with good one

• If both are good but differ, replace the block on the 2nd disk with the one on the 1st

File System Layout

• File System is stored on disks

– Disk is divided into 1 or more partitions

– Sector 0 of disk called Master Boot Record

– End of MBR has partition table (start & end address of partitions)

• First block of each partition has boot block

– Loaded by MBR and executed on boot


Implementing Files

• Contiguous Allocation: allocate files contiguously on disk


Linked List Allocation

• Each file is stored as linked list of blocks

– First word of each block points to next block

– Rest of disk block is file data


Using an in-memory table

• Implement a linked list allocation using a table

– Called File Allocation Table (FAT)

– Take pointer away from blocks, store in this table

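With a FAT, finding a file's blocks means following a chain through the in-memory table instead of reading each disk block to find the next pointer. A sketch, where the end-of-chain marker and the table size are assumptions:

```c
#include <assert.h>

#define FAT_EOF (-1)     /* hypothetical end-of-chain marker */
#define MAX_BLOCKS 16    /* hypothetical disk size in blocks */

/* fat[b] holds the number of the block that follows block b in its file.
   Writes the file's block numbers to out[] and returns how many there are. */
int file_blocks(const int fat[MAX_BLOCKS], int first, int out[], int max_out) {
    int count = 0;
    for (int b = first; b != FAT_EOF; b = fat[b]) {
        assert(b >= 0 && b < MAX_BLOCKS);
        assert(count < max_out);     /* also catches cycles in a corrupt FAT */
        out[count++] = b;
    }
    return count;
}
```

If a file starts at block 4 and fat[4] = 7, fat[7] = 2, fat[2] = 10, fat[10] = FAT_EOF, the file occupies blocks 4, 7, 2, 10, found without touching the data blocks themselves.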

I-nodes

• Index-node (I-node) is a per-file data structure

– Lists attributes and disk addresses of file’s blocks

– Pros: Space (only i-nodes of open files must be in memory: max open files * size per I-node)

– Cons: what if file expands beyond I-node address space?


Implementing Directories

• When a file is opened, OS uses path name to find dir

– Directory has information about the file’s disk blocks

• Whole file (contiguous), first block (linked-list) or I-node

– Directory also has attributes of each file

• Directory: map ASCII file name to file attributes & location

• 2 options: entries have all attributes, or point to file I-node


Implementing Directories

• What if files have large, variable-length names?

• Solution:

– Limit file name length, say 255 chars, and use previous scheme

• Pros: Simple

• Cons: wastes space

– Directory entry comprises fixed and variable portion

• Fixed part starts with entry size, followed by attributes

• Variable part has the file name

• Pros: saves space

• Cons: holes on removal, page fault on file read, word boundaries

– Directory entries are fixed in length, pointer to file name in heap

• Pros: easy removal, no space wasted for word boundaries

• Cons: manage heap, page faults on file names

Managing Free Disk Space

• 2 approaches to keep track of free disk blocks

– Linked list and bitmap approach


Backup Strategies

• Physical Dump

– Start from block 0 of disk, write all blocks in order, stop after last

– Pros: Simple to implement, speed

– Cons: cannot skip directories, make incremental dumps, or restore individual files

• No point dumping unused blocks, avoiding it is a big overhead

• How to dump bad blocks?

• Logical Dump

– Start at a directory

– Dump all directories and files changed since base date

– Base date could be of last incremental dump, last full dump, etc.

– Also dump all dirs (even unmodified) in path to a modified file

File System Consistency

• System crash before modified files written back

– Leads to inconsistency in FS

– fsck (UNIX) & scandisk (Windows) check FS consistency

• Algorithm:

– Build 2 tables, each containing counter for all blocks (init to 0)

• 1st table checks how many times a block is in a file

• 2nd table records how often block is present in the free list

– >1 not possible if using a bitmap

– Read all i-nodes, and modify table 1

– Read free-list and modify table 2

– Consistent state if each block appears exactly once, in either table 1 or table 2 but not both
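The two-table check can be sketched directly: count how often each block appears in i-nodes and in the free list, then classify each block. The status names and the tiny disk size are hypothetical; a real fsck also repairs what it finds.

```c
#include <assert.h>

#define NBLOCKS 8   /* hypothetical disk size in blocks */

typedef enum { BLK_OK, BLK_MISSING, BLK_DUP_FREE, BLK_DUP_DATA, BLK_IN_BOTH } blk_status;

/* in_use[b]  (table 1): how many times block b appears in some file's i-node.
   in_free[b] (table 2): how many times block b appears in the free list. */
void check_blocks(const int *inode_blocks, int n_inode_blocks,
                  const int *free_list, int n_free, blk_status status[NBLOCKS]) {
    int in_use[NBLOCKS] = {0}, in_free[NBLOCKS] = {0};
    for (int k = 0; k < n_inode_blocks; k++) {
        assert(inode_blocks[k] >= 0 && inode_blocks[k] < NBLOCKS);
        in_use[inode_blocks[k]]++;
    }
    for (int k = 0; k < n_free; k++) {
        assert(free_list[k] >= 0 && free_list[k] < NBLOCKS);
        in_free[free_list[k]]++;
    }
    for (int b = 0; b < NBLOCKS; b++) {
        if (in_use[b] == 0 && in_free[b] == 0)
            status[b] = BLK_MISSING;       /* lost block: add it to the free list */
        else if (in_use[b] > 0 && in_free[b] > 0)
            status[b] = BLK_IN_BOTH;       /* used and free at the same time */
        else if (in_free[b] > 1)
            status[b] = BLK_DUP_FREE;      /* duplicate in free list (not possible with a bitmap) */
        else if (in_use[b] > 1)
            status[b] = BLK_DUP_DATA;      /* shared by two files */
        else
            status[b] = BLK_OK;            /* exactly once in exactly one table */
    }
}
```

For i-node blocks {0, 1, 2, 5, 5} and free list {3, 4, 4, 7, 2}, blocks 0, 1, 3, and 7 are consistent, block 2 is in both tables, block 4 is a duplicate free entry, block 5 is shared by two files, and block 6 is missing.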

FS Performance

• Access to disk is much slower than access to memory

– Optimizations needed to get best performance

• 3 possible approaches: caching, prefetching, disk layout

• Block or buffer cache:

– Read/write from and to the cache.


Block Cache Replacement

• Which cache block to replace?

– Could use any page replacement algorithm

– Possible to implement perfect LRU

• Since the block cache is accessed much less frequently than memory

• Move block to front of queue

– Perfect LRU is undesirable. We should also answer:

• Is the block essential to consistency of system?

• Will this block be needed again soon?

• When to write back other blocks?

– Update daemon in UNIX calls sync system call every 30 s

– MS-DOS uses write-through caches


LFS Basic Idea

• Structure the disk as a log

– Periodically, all pending writes buffered in memory are collected in a single segment

– The entire segment is written contiguously at end of the log

• Segment may contain i-nodes, directory entries, data

– Start of each segment has a summary

– If segment around 1 MB, then full disk bandwidth can be utilized

• Note, i-nodes are now scattered on disk

– Maintain i-node map (entry i points to i-node i on disk)

– Part of it is cached, reducing the delay in accessing i-node

• This description works great for disks of infinite size


LFS Cleaning

• Finite disk space implies that the disk is eventually full

– Fortunately, some segments have stale information

– A file overwrite causes i-node to point to new blocks

• Old ones still occupy space

• Solution: LFS Cleaner thread compacts the log

– Read segment summary, and see if contents are current

• File blocks, i-nodes, etc.

– If not, the segment is marked free, and cleaner moves forward

– Else, cleaner writes content into new segment at end of the log

– The segment is marked as free!

• Disk is a circular buffer: the writer adds contents to the front, and the cleaner cleans content from the back

FS Examples

• DOS

• Win98

• WinXP

• UNIX FS

• Linux ext2FS

• NFS, AFS, LFS

• P2P FSes

Security in Computer Systems

• In computer systems, this translates to:

– Authorization

– Authentication

– Audit

• This is the Gold Standard for Security (Lampson)

• Some security goals:

– Data confidentiality: secret data remains secret

– Data integrity: no tampering of data

– System availability: nobody can make the system unusable

– Privacy: protecting from misuse of user’s information

Cryptography Overview

• Encrypt data so it only makes sense to authorized users

– Input data is a message or file called plaintext

– Encrypted data is called ciphertext

• Encryption and decryption functions should be public

– Security by obscurity is not a good idea!


Secret-Key Cryptography

• Also called symmetric cryptography

– Encryption algorithm is publicly known

– E(message, key) = ciphertext D(ciphertext, key) = message

• Naïve scheme: monoalphabetic substitution

– Plaintext : ABCDEFGHIJKLMNOPQRSTUVWXYZ

– Ciphertext: QWERTYUIOPASDFGHJKLZXCVBNM

– So, attack is encrypted to: qzzqea

– 26! possible keys ≈ 4 × 10^26 possibilities

• At 1 µs per permutation, about 10 trillion years to try them all

– Easy to break this scheme! How?

• Letter frequencies: ‘e’ occurs 14%, ‘t’ 9.85%, ‘q’ 0.26%
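The substitution table above can be applied mechanically. This sketch maps each letter through the slide's ciphertext row (output in lower case, non-letters passed through unchanged); the function name is hypothetical.

```c
#include <assert.h>
#include <ctype.h>
#include <stddef.h>

static const char KEY[] = "QWERTYUIOPASDFGHJKLZXCVBNM";   /* ciphertext row from the slide */

/* Monoalphabetic substitution: letter i of the alphabet maps to KEY[i]. */
void substitute(const char *plain, char *out, size_t outsz) {
    assert(outsz > 0);
    size_t i = 0;
    for (; plain[i] != '\0' && i + 1 < outsz; i++) {
        unsigned char c = (unsigned char)plain[i];
        out[i] = isalpha(c) ? (char)tolower((unsigned char)KEY[toupper(c) - 'A'])
                            : (char)c;
    }
    out[i] = '\0';
}
```

`substitute("attack", buf, sizeof buf)` produces "qzzqea", matching the slide. Because every 'e' always becomes the same letter ('t' here), counting letter frequencies in a long ciphertext quickly reveals the key.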

Public Key Cryptography

• Diffie and Hellman, 1976

• All users get a public key and a private key

– Public key is published

– Private key is not known to anyone else

• If Alice has a packet to send to Bob,

– She encrypts the packet with Bob’s public key

– Bob uses his private key to decrypt Alice’s packet

• Private key linked mathematically to public key

– Deriving the private key from the public key is computationally infeasible (e.g., RSA)

• Pros: more security, convenient, digital signatures

• Cons: slower


Digital Signatures

• Hashing function hard to invert, e.g. MD5, SHA

• Apply private key to hash (decrypt hash)

– Called signature block

• Receiver uses sender’s public key on signature block

– E(D(x)) = x should work (works for RSA)


Authentication

• Establish the identity of user/machine by

– Something you know (password, secret)

– Something you have (credit card, smart card)

– Something you are (retinal scan, fingerprint)

• In the case of an OS this is done during login

– OS wants to know who the user is

• Passwords: secret known only to the subject

– Simplest OS implementation keeps (login, password) pair

– Authenticates user on login by checking the password

– Try to make this scheme as secure as possible!

• Display the password when being typed? (Windows, UNIX)


Salting Example

• If the hacker guesses Dog, he has to try Dog0001, …

• UNIX adds 12 bits of salt

• Passwords should be made secure:

– Length, case, digits, not from dictionary

– Can be imposed by the OS! This has its own tradeoffs


One time passwords

• Password lasts only once

– User gets book with passwords

– Each login uses next password in list

• Much easier approach (Lamport 1981)

– Uses one-way hash functions

User stores: uid, passwd

Server stores: uid, n, m, H = h^m(passwd)

Login:
    n = n - 1
    S = h^n(passwd)
    if (h^(m-n)(S) == H)
        then m = n; H = S; accept
    else reject
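A sketch of the hash-chain check, with a loudly hypothetical stand-in for h: the splitmix64 mixing function, which is fast and well mixed but NOT one-way, so it must never be used for real passwords. Passwords are modeled as 64-bit integers for brevity.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Toy stand-in for a one-way hash (splitmix64 finalizer). NOT secure. */
static uint64_t h(uint64_t x) {
    x ^= x >> 30; x *= 0xbf58476d1ce4e5b9ULL;
    x ^= x >> 27; x *= 0x94d049bb133111ebULL;
    x ^= x >> 31;
    return x;
}

/* h^k(x): apply h k times. */
static uint64_t h_iter(uint64_t x, int k) {
    assert(k >= 0);
    while (k-- > 0)
        x = h(x);
    return x;
}

typedef struct { int m; uint64_t H; } otp_server;   /* invariant: H = h^m(passwd) */

/* Server side: accept S = h^n(passwd) if hashing it (m - n) more times gives H. */
bool otp_verify(otp_server *srv, int n, uint64_t S) {
    if (n < 0 || n >= srv->m)
        return false;                 /* each login must use an earlier chain link */
    if (h_iter(S, srv->m - n) != srv->H)
        return false;
    srv->m = n;                       /* m = n; H = S */
    srv->H = S;
    return true;
}
```

The server never stores the password itself, and an eavesdropper who captures S = h^n(passwd) cannot replay it, since the next login requires h^(n-1)(passwd), which would mean inverting h.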

Security Attacks & Defenses

• Attacks

– Trojan Horses

– Login spoofing

– Logic bombs

– Trapdoors

– Buffer overflows

– Viruses, worms

– Denial of Service

• Defenses

– Virus Scanners

– Lures

– Intrusion Detection

Mobile Code Protection

• Can we place extension code in the same address space as the base system, yet remain secure?

• Many techniques have been proposed

– SFI

– Safe interpreters

– Language-based protection

– PCC

Encoding Security

• Depends on how a system represents the Matrix

– Not much sense in storing entire matrix!

– ACL: column for each object stored as a list for the object

– Capabilities: row for each subject stored as list for the subject

             Cs414 grades   Cs415 grades   Emacs
  Ranveer    r/w            r/w            Kill/resume
  Tom        r              r/w            None
  Mohamed    r              r              None

Protecting Capabilities

• Prevent users from tampering with capabilities

• Tagged Architecture

– Each memory word has extra bit indicating that it is a capability

– These bits can only be modified in kernel mode

– Cannot be used for arithmetic, etc.

• Sparse name space implementation

– Kernel stores capability as object+rights+random number

– Give copy of capability to the user; user can transfer rights

– Relies on inability of user to guess the random number

• Need a good random number generator


Protecting Capabilities

• Kernel capabilities: per-process capability information

– Store the C-list in kernel memory

– Processes access capabilities by offset into the C-list

– Indirection used to make capabilities unforgeable

– Meta instructions to add/delete/modify capabilities


Protecting Capabilities

• Cryptographically protected capabilities

– Store capabilities in user space; useful for distributed systems

– Store <server, object, rights, f(object, rights, check)> tuple

– The check is a nonce,

• unique number generated when capability is created;

• kept with object on the server; never sent on the network

• Language-protected capabilities

– SPIN operating system (Mesa, Java, etc.)


Capability Revocation

• Kernel based implementation

– Kernel keeps track of all capabilities; invalidates on revocation

• Object keeps track of revocation list

– Difficult to implement

• Timeout the capabilities

– How long should the expiration timer be?

• Revocation by indirection

– Grant access to object by creating alias; give capability to alias

– Difficult to review all capabilities

• Revocation with conditional capabilities

– Object has state called “big bag”

– Access only if the capability’s little bag has something in the object’s big bag