Usability Study Design John C. Tang November 8, 2007

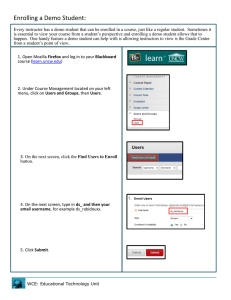

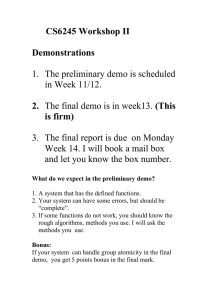

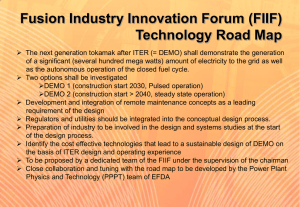

New assignment!
Travel to an unfamiliar country
– Alone
– Especially if you’re unfamiliar with the language
– Record your first impressions
(only kidding about being an assignment!)

Conceptual Model of a System
Design Model
– The model the designer has of how the system works
System Image
– How the system actually works
– The structure and behavior of the system
User’s Model
– How the user understands how the system works

Mismatched conceptual models
? ? ?

Flying from Shanghai to Jinan

Hongqiao vs. Pudong airports

Current events
OpenSocial APIs
– Profile Information (user data)
– Friends Information (social graph)
– Activities (things that happen, news feed type stuff)
Focus on concepts, not implementation

Current events (2)
Facebook ads
– Business facebook pages
– Spreading marketing messages through social connections
– Allowing businesses to gather facebook activity data
– Further diluting the meaning of “friend”?
– Beware social phishing

Today
Review usability study methods
Apply to facebook applications
Review actual usability study report

Methods for evaluating system
Qualitative
– Rich, subjective
– Exploring concepts
Quantitative
– Precise, objective, repeatable
– Demonstrating claims

Critical incident technique
Noting significant events that affect, either positively or negatively, the user’s ability to accomplish a task
Gather incidents over participants, look for trends, patterns, correlations

Questionnaires & surveys
Design questions with analysis in mind
– Closed format more precise, easier to analyze
    Convert qualitative to quantitative measures
    You give categories to users
– Open-ended questions provide richer feedback, longer to analyze
    Users give you categories

Designing survey questions
Multiple choice
– Collecting information
Ordinal ranking
– Expressing relative preferences
Likert scales
– Expressing personal reactions

Likert scales
Ask users to rate on a numeric scale
Odd number scale allows a neutral midpoint (5- or 7-point scale)
Even number scale forces taking a position (4- or 6-point scale)
“Anchors” give examples of points along the scale

Semi-structured interviews
Interactively asking questions (face-to-face, telephone)
Give users chance to explain “why” to complement “what” they did, subjective user’s viewpoint
Can help with design questions
– “What improvements would you suggest?”
Can be done individually or in groups

Quantitative measures (comparison)
Independent variables
– Attributes we manipulate / vary in condition
– Levels, value of attribute
Dependent variables
– Outcome of experiment, measures to evaluate
– Usually measure user performance
    Time to completion
    Errors
    Amount of production
    Measures of satisfaction
    Flow path tracking

Experiment design (2)
Control variables
– Attributes that remain the same across conditions
Random variables
– Attributes that are randomly sampled
– Can be used to increase generalizability
Avoiding confounds
– Confounds are attributes that changed but were not accounted for
– Confounds prevent drawing conclusions on independent variables

Flow tracking
Track sequence of pages / steps visited to detect typical flow through application
Look for opportunities to optimize, simplify flow

Usage logging
Embed logging mechanisms into code
Study usage in actual deployment
facebook usage metrics
Anonymous data from unknown users
Could embed survey mechanism in application

Combining methods
Usage logging for breadth
Interviews for depth
Rating perceptions on scales for measure
Interview for understanding

Cardinal rule
Pilot test your study!
– Feels like you’re throwing away data, but you’re saving time by ensuring proper data collection
Example: I recently lost data because usage logging mechanism not sufficiently tested
Go Stanford!

Recording the screen
Recording data for usability study
Recording demo videos
Camtasia by TechSmith (for Windows)
http://techsmith.com/camtasia.asp

Camtasia
Enables recording “video” of computer screen plus audio
Software install on target computer
Configure capture frame rate to ease loading on the computer
30-day free trial

Camtasia demo

Evaluating facebook applications?
Measures of perception may be most meaningful
Hard to find meaningful performance metrics
Asking you to specify metrics you would monitor over long term deployment
Popularity ≠ Usability

facebook demos
New York Times quiz
Scrabulous

For your project
Require aspects of both qualitative and quantitative methods
– Qualitative
    How users react to application, perceptions
– Quantitative
    How users perform on application, ratings
What would you improve on next iteration?
– Perhaps users’ perceptions of performance more important than actual values
User study of at least number of members in group
– Volunteer for other groups, but no mutual testing
Examples from Fall 2006:
http://vis.berkeley.edu/courses/cs160fa06/wiki/index.php/Pilot_Usability_Study

Consent form
If doing recording of data, have users sign a consent form
Ask yourself, “If my data were being recorded, what would I want to know?”
– Contact info for questions
– What activity observed
– Who can access data
– How data will be used
– What data collected
– How to delete data
– Review before show publicly

Project status
Good work on checkpoint!
Our feedback to you
Feel free to seek out more input
Work through platform stability issues
– Use rhombus or pentagon
– facebook pushes are Tuesday evening

Planning for the demo!
Nov. 13 is demo of application
– Intended to be implementation complete
– Rest of time is for usability study and final presentation
Tune demo to show best of application
– Avoid ‘unseemly’ parts of application
– Create story to show off best parts
– Coding after demo should be limited

Presenting your work: demo
Tuesday, 10:30-12:00 noon
Wozniak Lounge (open starting at 10:00)
Larger community invited (11:00)
Dress business casual
Refreshments!
Demo
Poster

Demo
Prepare, rehearse a demo ‘script’
– Introduce need, motivation
– Walk them down a ‘garden path’
– Use this to prioritize development work
Contingency plans
– Screenshots (on poster, stored on machine)
– Video demo (use Camtasia)

Poster
Template sent via email
Need
Screenshot(s)
Indicator of design process
Minimize text, Maximize interest
Submit by Sunday, Nov. 11 by midnight to cs160@imail.eecs.berkeley.edu

HelloWorld Application
John Doe, Jane Smith, Mary Chung, Lee Lin
The Hottest Place to Greet the World!
Goal: What need our project fulfills, i.e. What’s the problem I solve
Look here’s a picture
Callouts to show features
What we did: What does the app do!
Callouts to show features
Ooh Details.
Reasons why this is cool…
Key design decisions? Why does it do what it does?
Iterations of our Design: This is how we built it!
In Summary: Our app encourages social interaction!
Additional Functionality
See, click here to do this!

Final Projects
CS 160 Fall’07
User Interface Design, Prototyping, and Evaluation
Applications
– AutoClub
– BestEats
– Birthday Card
– Blurbs
– Comic Avatar
– DrawIT
– Headhunters
– Pyramid Communications Hub
– Size Me Up
– Tag Your Friends
– Travelogue
Social Networking
This term’s group project was to design and prototype a facebook application. Students identified user needs and created designs that leveraged social interactions. They implemented on the facebook platform.
User study evaluation will be included in Final Presentation Dec. 4 & 6
Berkeley & IBM: the teaching team are volunteers from IBM Almaden Research Center and Berkeley TAs
http://inst.eecs.berkeley.edu/~cs160/fa07/
John C. Tang, Christine Robson, David Sun, Bryan Tsao

Grading criteria
Need: (Is need properly explained? Is this useful?)
Flow through application steps: (Are steps through the activity clear? Is ordering logical?)
Usability: (Ease of use, Visibility of affordances)
Appearance: (Attractive? Good use of color? Fit with facebook?)
Implementation: (Did everything work ok?)
Presentation: (Did demo clearly explain application? Did poster clearly explain project?)

Individual Assessments
Team dynamics are part of the course
For each group member (including self)
– Rating from 1 (weak contribution) to 5 (strong contribution)
– Paragraph description per person
Email to cs160@imail.eecs.berkeley.edu

Usability study report
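
As a rough illustration of the quantitative side of a usability study report, the sketch below summarizes Likert ratings and compares a dependent variable (time to completion) across levels of an independent variable (the design condition). Everything in it is an assumption made for illustration: the function names, the survey statement, the condition labels `design_a` and `design_b`, and the sample numbers are invented and do not come from the course or from any real study. Reporting the median and the response distribution is one common choice for Likert data, not a requirement of the assignment.

```python
from collections import Counter
from statistics import mean, median


def summarize_likert(ratings, points=5):
    """Summarize ratings given on a 1..points Likert scale."""
    if any(r < 1 or r > points for r in ratings):
        raise ValueError("rating outside the scale")
    counts = Counter(ratings)
    return {
        "n": len(ratings),
        "median": median(ratings),
        # Number of responses at each scale point, from 1 up to `points`.
        "distribution": [counts.get(p, 0) for p in range(1, points + 1)],
    }


def mean_by_condition(trials):
    """Average the dependent variable (seconds to complete the task)
    for each level of the independent variable (the condition)."""
    grouped = {}
    for condition, seconds in trials:
        grouped.setdefault(condition, []).append(seconds)
    return {condition: mean(values) for condition, values in grouped.items()}


# Hypothetical data: five participants rate "The application was easy to use"
# on a 5-point scale (1 = strongly disagree, 5 = strongly agree).
ease_of_use = [4, 5, 3, 4, 2]

# Hypothetical data: (condition, seconds to completion) for two made-up designs.
trials = [
    ("design_a", 30.0),
    ("design_b", 40.0),
    ("design_a", 50.0),
    ("design_b", 20.0),
]

likert_summary = summarize_likert(ease_of_use)
time_summary = mean_by_condition(trials)
print(likert_summary)
print(time_summary)
```

With so few participants, numbers like these are best read alongside the interview and critical-incident notes rather than as evidence for a claim on their own.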