DISEMBODIED PERFORMANCE

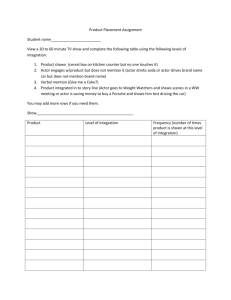
