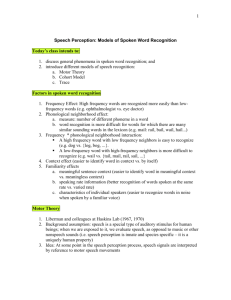

Speech Perception and Spoken Word Recognition: Past and Present

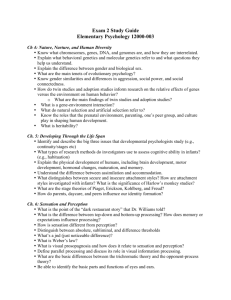
